Immersive Design

Affective | Interactive

Production | Installation

Performance

This area of my folio focuses on design for immersive experiences, interactions, installations and performance. It is about how things feel, not just what they do and how they work, and it is one of my specialties.

Firstly, what is Affective Design?

Affective design for digital interaction and experience focuses on interpreting and mapping human emotion (affect), and on building interaction feedback systems and responsive mechanisms honed to it. Emotion is treated as a primary driver of experience, not an afterthought.

Affective Design moves beyond purely functional or aesthetic considerations to embed emotional resonance at the core of interaction. My focus on Immersive-UX sits at the intersection of affective design psychology, gamification and interaction feedback-loop design.

Fundamentally, affective research and development focuses on building causal-loop systems and environments that respond to, adapt to or anticipate emotional resonance, either passively through careful tone-setting or actively through real-time feedback.

Overview

Approach | Methods | Projects

An Overview of My Approach and Featured Projects

From founding Nurobodi (my digital wellbeing R&D startup) to collaborating with CSIRO on Explainable AI (XAI) and developing immersive audiovisual design courses for RMIT, my practice demonstrates how affective design can bridge therapeutic applications, educational innovation and shared socio-cultural experiences. My work integrates:

Resonance theory - How audiovisual frequencies shape cognitive and emotional states

Interaction psychology - Understanding the affective qualities of user experience

Systems thinking - Designing feedback loops that respond or adapt to human needs

Practice-based research - Prototyping and testing in real-world contexts

Featured Projects

Below you'll find case studies spanning:

Research & Development - Nurobodi's affective AI and resonance research

Interactive Software Prototyping - SonoChroma keyboard and sonochromatic expression

Educational Innovation - RMIT's Transformative Colour-Resonance Environments course

Immersive Experiences - Digitising the Capitol Theatre for 360° XR experiences

Design/STEM Technical Innovation - RMIT VX Robotics Lab multi-screen array design

Production Design for Live Performance - Tony Yap Company's Mad Monk production design

Each project explores how intentional design of emotional experience can create deeper engagement, better outcomes, and more meaningful connection.

Nurobodi

Quantitative and Qualitative

Affective Research

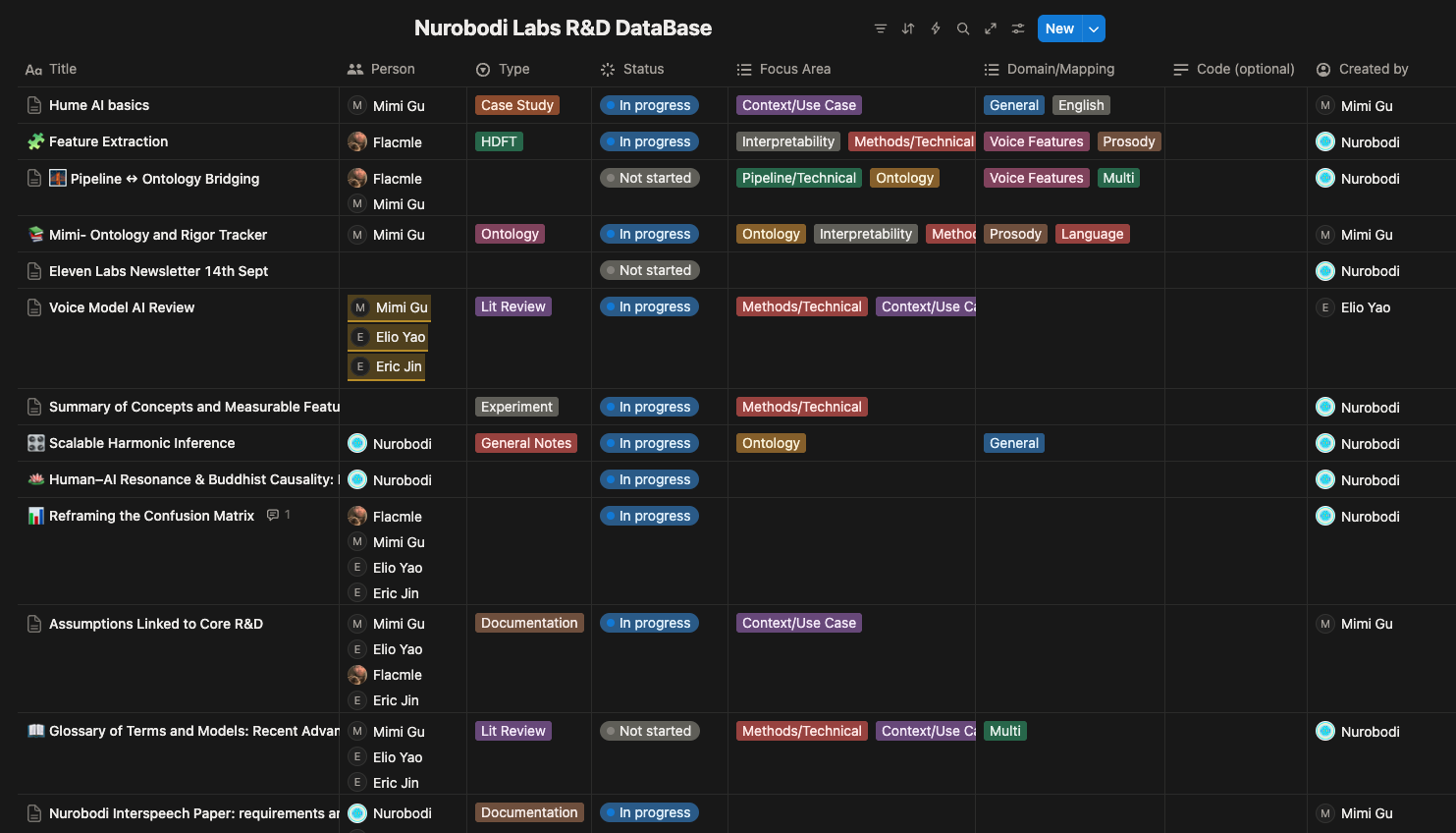

Affective Design Research | Digital Wellbeing R&D | Emotion-Aware AI

#AffectiveDesign #EmotionAI #UX #Interactive #SpatialDesign #Systems #DigitalWellbeing

Overview:

Nurobodi is my long-form research and development initiative exploring how adaptive audiovisual environments can support mental, emotional, and physical wellbeing. Founded in 2017, it bridges affective (emotional) design, sonic interaction, colour and sound perception psychology, and mindfulness to prototype interactive systems that facilitate emotional and cognitive alignment. The work increasingly explores vocal resonance as a key driver of integrative shifts in cognitive-somatic awareness.

Research Focus Areas

1. Affective Resonance & Sound-Colour Integration

Investigating how audiovisual frequencies—sound and light—interact to shape emotional and cognitive states.

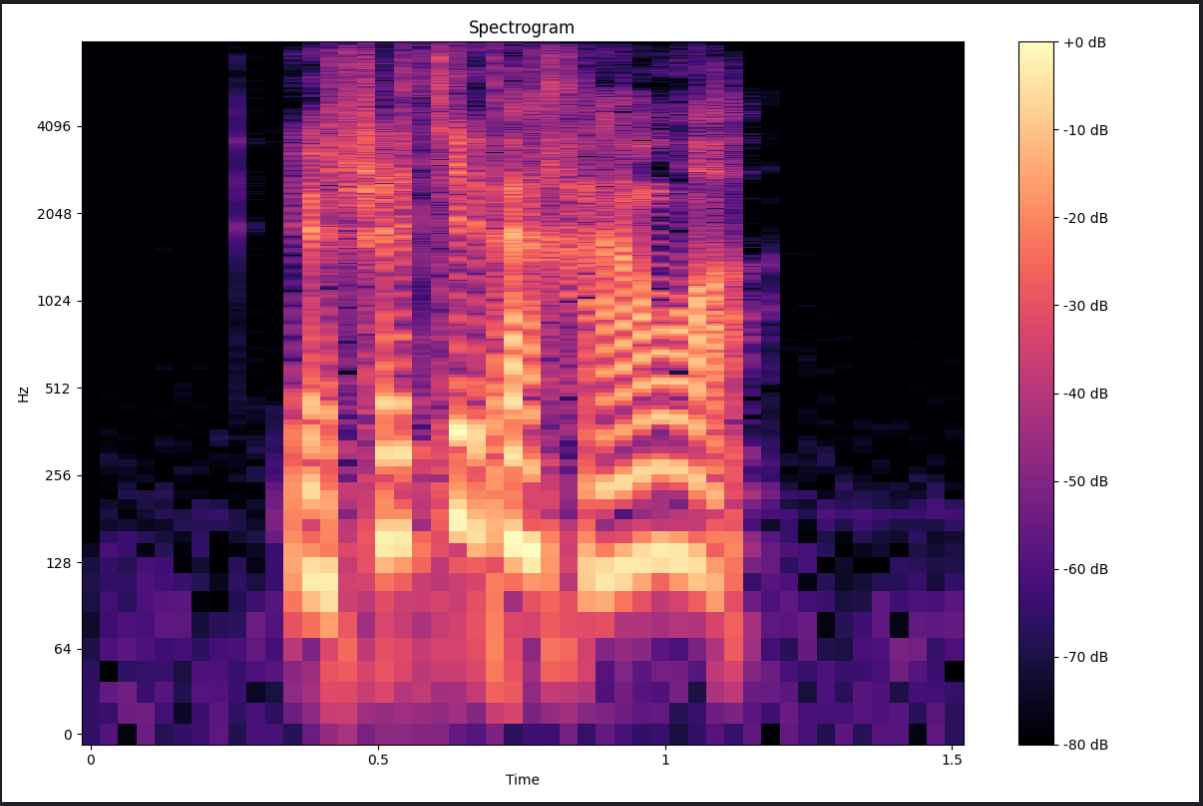

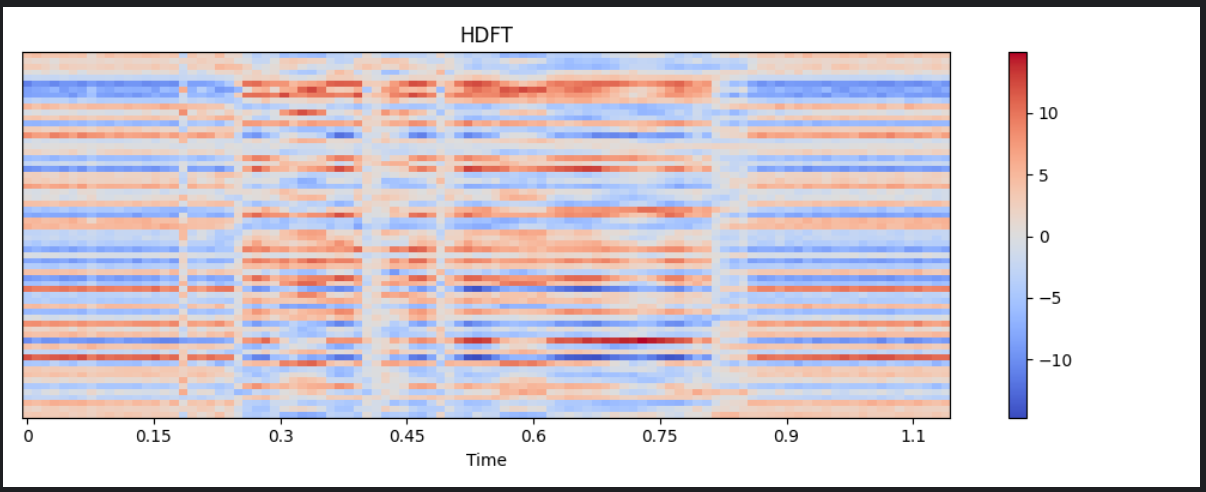

2. Paralinguistic AI & Emotion Recognition

Exploring how vocal cues (tone, prosody) can be analysed & synthesised to create more emotionally attuned AI interactions.
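Analysing prosody usually starts from low-level vocal features, and pitch (fundamental frequency, F0) is one of the most basic. The sketch below is a generic textbook technique, not Nurobodi's actual pipeline: it estimates F0 for one short voiced frame by finding the strongest autocorrelation peak inside an assumed 75 to 400 Hz speaking range. The function name and default parameters are illustrative assumptions.

```python
import numpy as np


def estimate_f0(frame: np.ndarray, sample_rate: int,
                fmin: float = 75.0, fmax: float = 400.0) -> float:
    """Estimate the fundamental frequency (Hz) of one voiced frame by autocorrelation.

    The frame must be longer than sample_rate / fmin samples so the longest
    candidate period fits inside it.
    """
    frame = frame - frame.mean()                         # remove any DC offset
    # Autocorrelation at every lag; keep the non-negative lags only
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    lag_min = int(sample_rate / fmax)                    # shortest period considered
    lag_max = int(sample_rate / fmin)                    # longest period considered
    # The lag with the strongest self-similarity is taken as the pitch period
    lag = lag_min + int(np.argmax(ac[lag_min:lag_max]))
    return sample_rate / lag
```

Running it over successive overlapping frames yields a pitch contour; practical systems generally add voicing detection and smoothing on top of a raw estimate like this.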

3. Human-in-the-Loop AI Design

Creating ethical frameworks for AI systems that keep humans central to decision-making processes.

4. Digital Wellbeing Applications

Translating affective design research into practical tools for mood regulation, stress reduction, and cognitive enhancement through interactive audiovisual environments.

5. Work Integrated Learning (WIL) Internships

Students engage in practice-based research within Nurobodi's active R&D projects, developing technical capabilities while contributing to innovation in affective computing and human-centered AI.

Key Nurobodi Industry Collaborations

CSIRO Partnership: AMBER AI R&D Project

Led design and project management for AMBER—an ethical AI chatbot prototype developed in collaboration with CSIRO Data61. The project explored human-in-the-loop approaches to responsible AI, with a focus on explainable decision-making and emotional intelligence.

Dr. Justine Lacey, Research Director of Responsible Innovation at CSIRO:

"Cy's ability to synthesise complex ideas from our multidisciplinary teams at CSIRO and RMIT was instrumental in the development of AMBER... The UX design team at CSIRO Data61 were extremely impressed by Cy's work. The simple and intuitive user interface highlighted his mastery of human-centered design... He explored possibilities that could influence future industry standards for ethical AI applications."

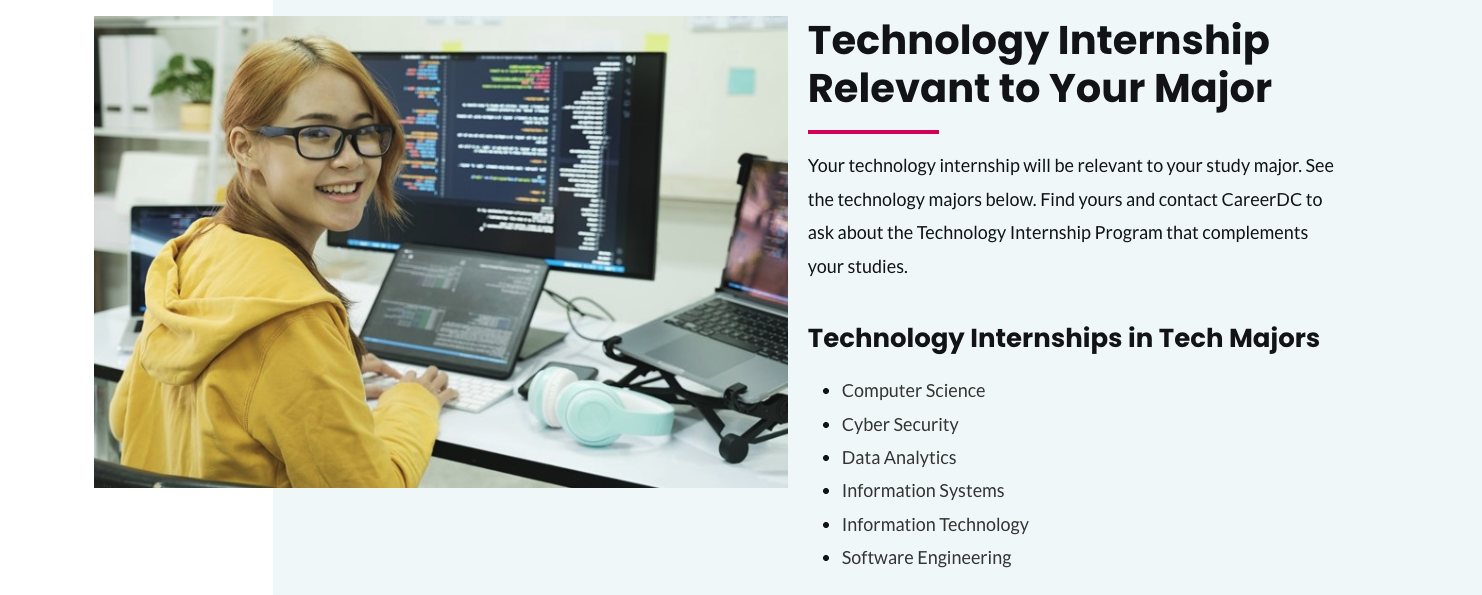

CareerDC Industry Internships: R&D Supervision

I am currently partnering with CareerDC Internships on industry-based supervision of postgraduate students and researchers from multiple Australian universities, including Melbourne University, UNSW and Monash University, across:

Prosodic and Vocalic Analysis

Data science

Biomedical and Pharma

Business analytics

This interdisciplinary research culture is advancing Nurobodi's core research in emotion recognition, affective AI, and therapeutic applications of resonance-based design.

Research Methodologies

AI Development Approach

Prosodic/Vocalic dataset curation - Ensuring responsible sourcing and diverse representation

Human-centered evaluation - Prioritizing human experience over pure technical performance

Iterative prototyping - Testing multiple data sets on emerging model architectures

Explainable AI (XAI) - Making AI decision-making transparent and understandable

Emerging Research for Downstream Applications & Use Cases

Therapeutic Contexts:

Mood regulation and stress reduction tools

Cognitive enhancement through audiovisual stimulation

Accessibility applications for neurodivergent users

Commercial Applications:

Customer experience design with emotional intelligence

AI voice assistants with prosodic awareness

Brand environments optimized for affective impact

Research Contributions:

Novel methodologies for emotion recognition

Frameworks for ethical AI development

Datasets for affective computing research

“This internship transformed my general perception of AI voice generation, which has far more applications from voice cloning. My impression of the upper bound of AI voice has been overturned — from the flattened, monotonous, robotic voice by typical text-to-speech models to emotionally rich and high-fidelity customisable humanlike voice that ultimately constitutes a user-AI interaction loop”

Nurobodi Key Takeaways

8+ years of dedicated research in affective design and affective (emotional) Human-Computer Interaction (HCI)

CSIRO-validated approach to human-centered industry-based AI design

CareerDC industry Work Integrated Learning (WIL) research and development supervision

Supervision of Design/STEM postgraduate researchers advancing interdisciplinary applications

Industry accelerator recognition from CSIRO and Generation AI

Practical applications spanning therapy, wellbeing, and accessibility

Nurobodi, as an affective research, design and service development startup, demonstrates that design can be both emotionally intelligent and ethically grounded, creating technology that serves human wellbeing rather than extracting from it.

SonoChroma Software

Software | Design | Development

Prototyping | Chat Interface | Gamification

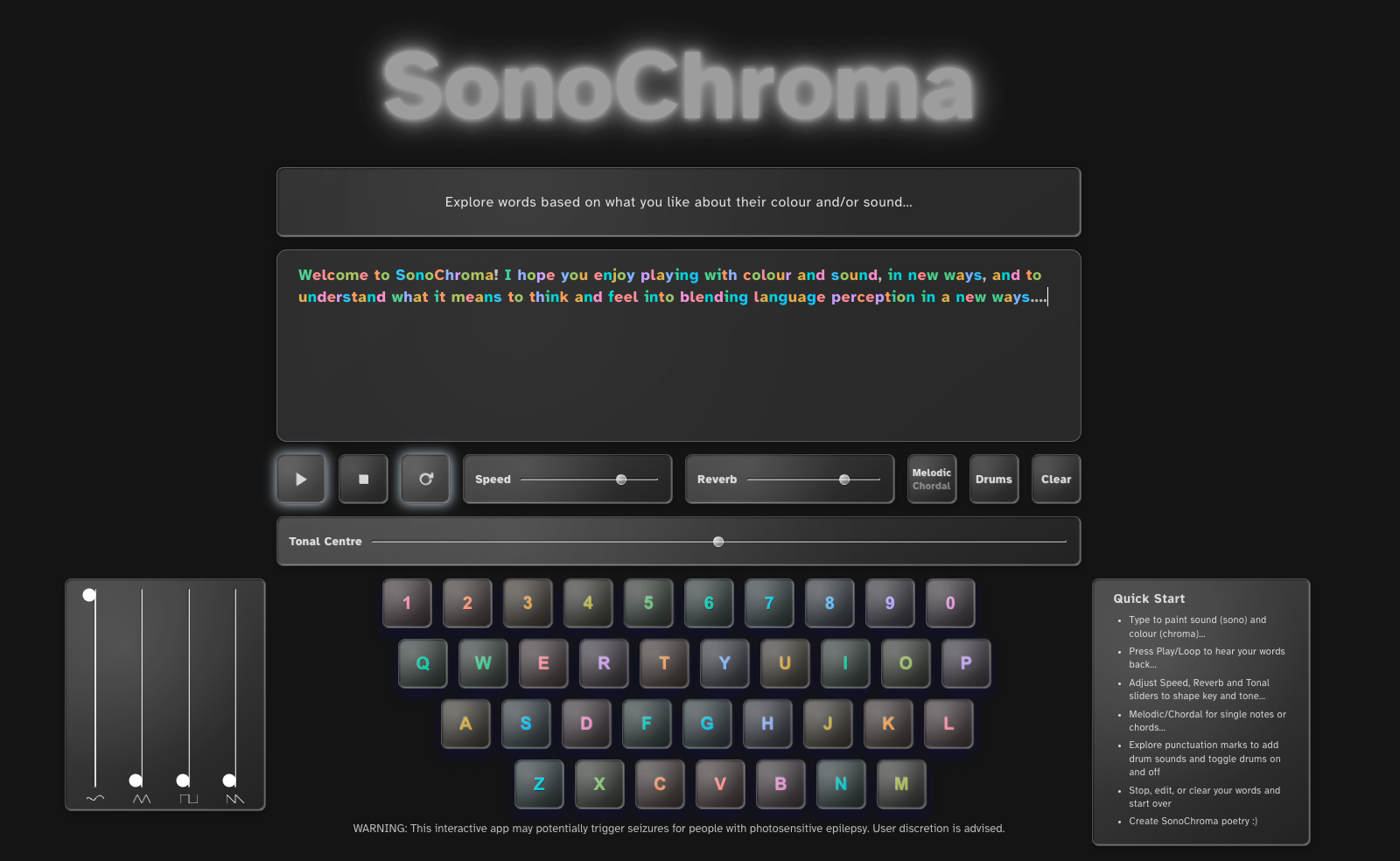

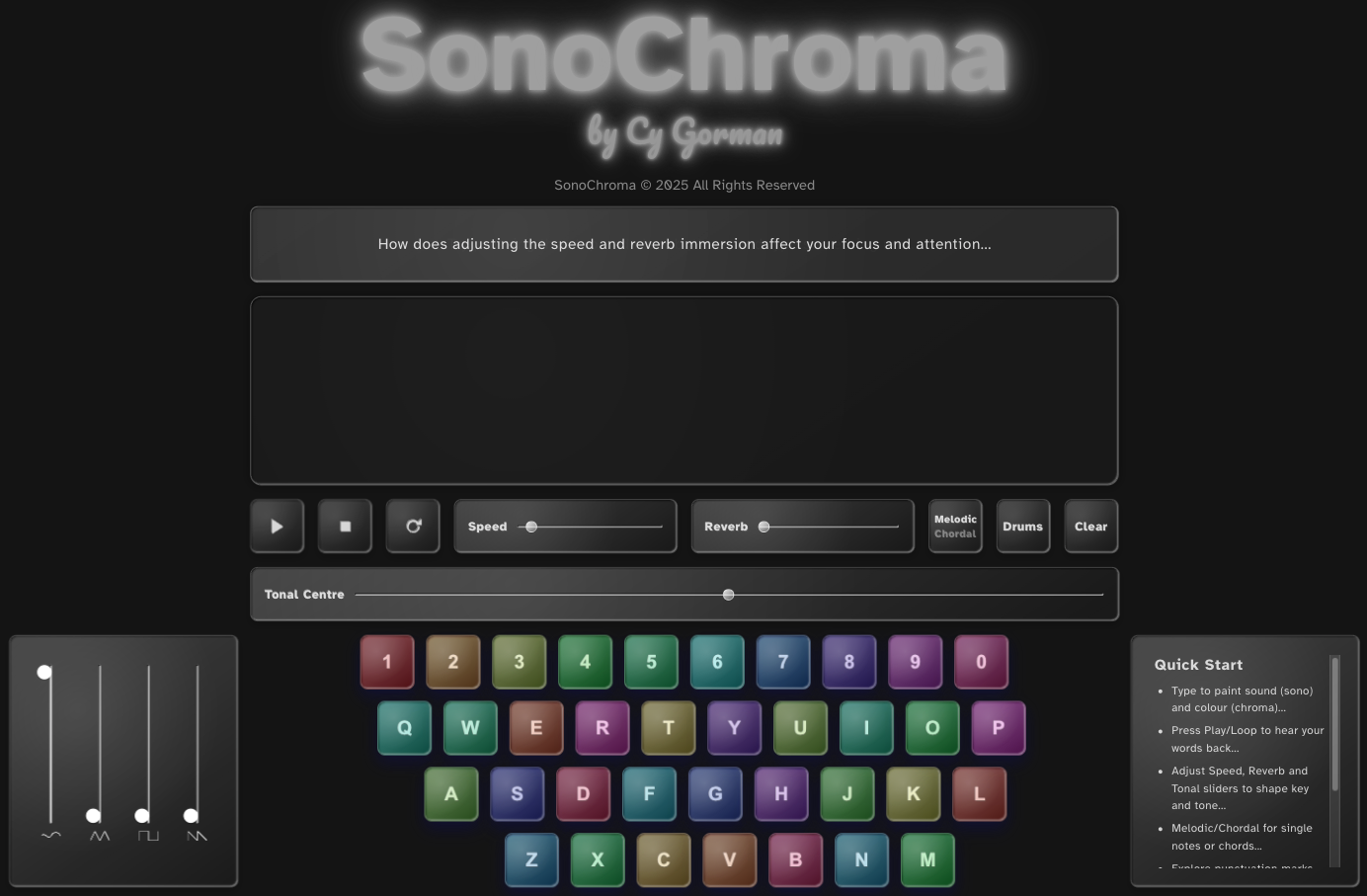

SonoChroma Keyboard

#AffectiveDesign #Accessibility #InteractiveDesign #SoundDesign #ColorTheory #Innovation #WebApplication

Interactive Software Prototype | Accessible Design Innovation | Sonochromatic Expression | Synaesthetics

The Concept

What if typing became music? What if words had colour? SonoChroma Keyboard is an interactive web-based application that transforms typed language into sonic and colored 'sonochromatic' expression. Each keystroke becomes both a character and a note—an act of real-time audiovisual composition. Words emerge as melodies and chords, with punctuation marks acting as percussive elements.

This research and development prototype explores how multi-sensory interaction can expand accessibility and create new forms of expressive communication.

The Challenge

Traditional accessibility design often treats sensory differences as deficits to compensate for. I ask: what if we flipped that model? What if users who navigate primarily through sight, or through sound alone, were not accommodated as an afterthought but instead became power users of entirely new interaction paradigms? By treating vision and sound as each independently important, and also as the basis for novel design interdependencies, SonoChroma positions accessibility as a generative design principle, not a compliance checkbox.

Beyond 1&0 2025 Vietnam - Digital Design Exhibition and Premiere of the Sonochroma App

How It Works

Gamified Real-Time Audiovisual Composition

Each letter triggers a specific musical note and color combination

Words become melodic sequences with chromatic accompaniment

Punctuation adds rhythmic elements (drum sounds, pauses, accents)

Typing speed and rhythm influence the musical phrasing

The result: Writing becomes performing—every message is a composition
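The keystroke-to-sound behaviour described above can be sketched as a small event model. This is a minimal illustration under assumed rules, not SonoChroma's source: letters are placed on successive degrees of a C major scale starting at middle C, a hypothetical table assigns drum sounds to punctuation, and the gap since the previous keystroke sets loudness so that faster typing plays louder. The scale, the drum names and the 30 to 120 velocity range are all placeholders.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping tables (placeholders, not SonoChroma's actual values)
MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]   # semitone offsets of a major scale
ROOT_MIDI = 60                          # MIDI note 60 = middle C (C4)
PERCUSSION = {".": "kick", ",": "hi-hat", "!": "snare", "?": "tom"}


@dataclass
class SoundEvent:
    kind: str                    # "note", "percussion" or "rest"
    midi: Optional[int] = None   # pitch for "note" events
    drum: Optional[str] = None   # sample name for "percussion" events
    velocity: int = 64           # loudness on the MIDI 1..127 scale


def letter_to_midi(ch: str) -> int:
    """Map a..z onto successive major-scale degrees, climbing an octave every 7 letters."""
    i = ord(ch.lower()) - ord("a")                      # 0..25
    octave, degree = divmod(i, len(MAJOR_SCALE))
    return ROOT_MIDI + 12 * octave + MAJOR_SCALE[degree]


def midi_to_hz(midi: int) -> float:
    """Twelve-tone equal temperament with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2 ** ((midi - 69) / 12)


def velocity_from_interval(seconds: float) -> int:
    """Shorter gaps between keystrokes (faster typing) play louder, clamped to 30..120."""
    return max(30, min(120, 120 - int(seconds * 180)))


def keystroke_to_event(ch: str, seconds_since_last: float) -> SoundEvent:
    """Turn one keystroke plus its timing into a sound event."""
    vel = velocity_from_interval(seconds_since_last)
    if ch.isascii() and ch.isalpha():
        return SoundEvent("note", midi=letter_to_midi(ch), velocity=vel)
    if ch in PERCUSSION:
        return SoundEvent("percussion", drum=PERCUSSION[ch], velocity=vel)
    return SoundEvent("rest", velocity=vel)             # spaces and unmapped keys pause
```

In a browser, events like these could drive Web Audio oscillators or samples; keeping the mappings in plain tables is one way user-defined key mappings could later be supported.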

Learning about Accessibility Through Synaesthesia

The system explores what happens when affective resonance guides meaning-making:

Users with visual impairments navigate through sound feedback

Users with hearing differences navigate through color cues

Neurodivergent users can express mood and tone through compositional choice

All users gain a multi-sensory dimension to text-based communication

Design Philosophy

The Social Model of Disability

SonoChroma challenges the idea that "disability" exists in the user rather than in the design. By creating an interface where sound and color are primary, not supplementary, it asks:

What might users with sensory expertise teach us about interface design?

Could multimodal interaction become the default, not the accommodation?

How do we design for emotional expression, not just information transmission?

Progressive Design Thinking

Rather than retrofitting accessibility features, SonoChroma builds them into the core interaction model. This approach:

Centers diverse users in the design process

Explores novel interaction paradigms rather than replicating existing ones

Values affective communication alongside semantic meaning

Treats play and exploration as legitimate forms of interaction.

Technical Innovation

Web-Based Architecture

Built as an accessible web application (no proprietary platforms or downloads)

Responsive across devices (desktop, tablet, mobile)

Low bandwidth requirements for accessibility in varied contexts

Sound Design System

Real-time audio synthesis engine

Customizable instrument and scale options

Spatial audio capabilities for immersive experience

Adaptive dynamics based on typing patterns

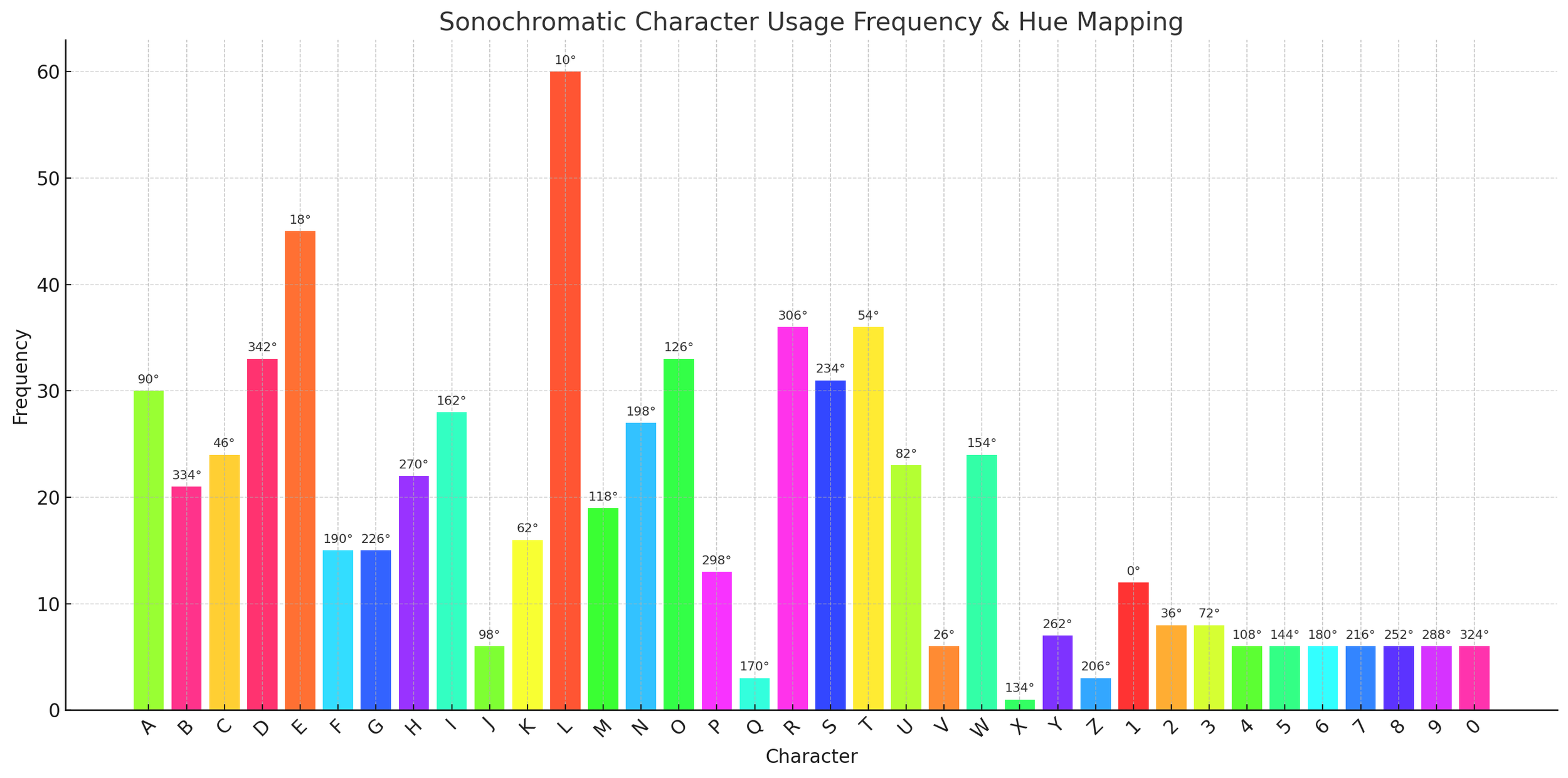

Colour Mapping Framework

Research-based color-emotion associations

HSL/RGB spectrum integration

Customizable palettes for user preference

High contrast modes for visual accessibility
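One way to combine HSL/RGB integration with a high-contrast mode is sketched below. The pitch-to-hue rule (30 degrees per semitone, lightness rising with octave) is a hypothetical placeholder rather than the research-based colour-emotion associations SonoChroma uses. The contrast check follows the WCAG 2.x relative-luminance and contrast-ratio definitions, and Python's standard `colorsys` module handles the HSL-to-RGB conversion.

```python
from __future__ import annotations

import colorsys


def note_to_hsl(midi: int) -> tuple[float, float, float]:
    """Hypothetical mapping: pitch class -> hue, octave -> lightness, full saturation."""
    pitch_class = midi % 12                  # C=0, C#=1, ... B=11
    octave = midi // 12 - 1                  # MIDI 60 -> octave 4
    hue = pitch_class * 30.0                 # 12 semitones spread around 360 degrees
    lightness = min(0.85, max(0.25, 0.25 + 0.1 * (octave - 2)))
    return hue, 1.0, lightness


def hsl_to_rgb255(h: float, s: float, l: float) -> tuple[int, int, int]:
    """HSL (hue in degrees, s and l in 0..1) to 8-bit RGB."""
    r, g, b = colorsys.hls_to_rgb(h / 360.0, l, s)   # colorsys takes H, L, S in that order
    return round(r * 255), round(g * 255), round(b * 255)


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """WCAG 2.x relative luminance of an 8-bit sRGB colour."""
    def linear(c: int) -> float:
        v = c / 255.0
        return v / 12.92 if v <= 0.03928 else ((v + 0.055) / 1.055) ** 2.4
    r, g, b = (linear(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: tuple[int, int, int], b: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1:1 (identical colours) to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(a), relative_luminance(b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def high_contrast_text(bg: tuple[int, int, int]) -> tuple[int, int, int]:
    """High-contrast mode: pick black or white text, whichever contrasts more with bg."""
    black, white = (0, 0, 0), (255, 255, 255)
    return black if contrast_ratio(black, bg) >= contrast_ratio(white, bg) else white
```

Keeping the colour mapping (which could be swapped for a user's custom palette) separate from the contrast check means accessibility guarantees hold whatever palette is chosen.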

Research Questions Explored

Interaction Design:

How does multi-sensory feedback change typing behavior and emotional expression?

Can sonochromatic mapping create more emotionally nuanced digital communication?

What new forms of creative expression emerge from expanded input modalities?

Accessibility:

How do users with different sensory capacities experience and adapt the system?

Can "expert users" (those with sensory differences) guide future development?

What universal design principles emerge from accessibility-first prototyping?

Affective Design:

Does audiovisual feedback increase emotional awareness during communication?

How does the playful, musical quality affect user engagement and wellbeing?

Can affective interfaces reduce cognitive load while increasing expressiveness?

Future Development (Beyond Prototype)

Phase 1: Expanded User Testing (Current)

Conducting studies with diverse user groups

Gathering feedback from accessibility community

Iterating based on real-world usage patterns

Phase 2: Customisation & Personalisation

User-defined key mappings (notes, colours, sounds)

Preset "mood palettes" for different emotional contexts

Save and share compositions/settings

Phase 3: Communication Integration

Plugin versions for messaging platforms

Collaborative composition features (multi-user)

Integration with assistive technologies

Impact & Insights

Design Learnings

Accessibility can drive innovation, not constrain it

Play is a legitimate research methodology—exploration reveals unexpected insights

Affective feedback transforms functional interactions into meaningful experiences

Multi-sensory design benefits all users, not just those with accessibility needs

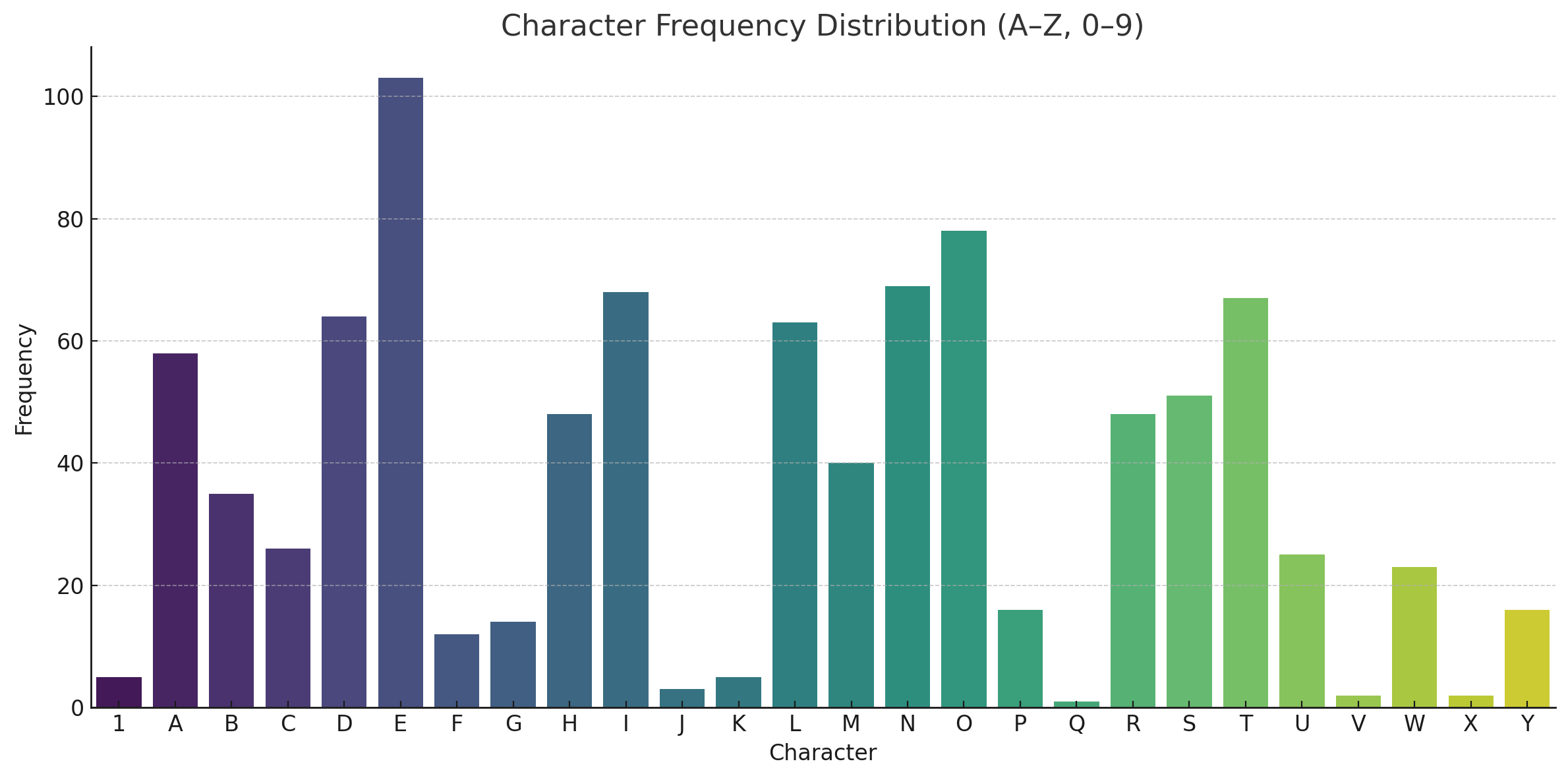

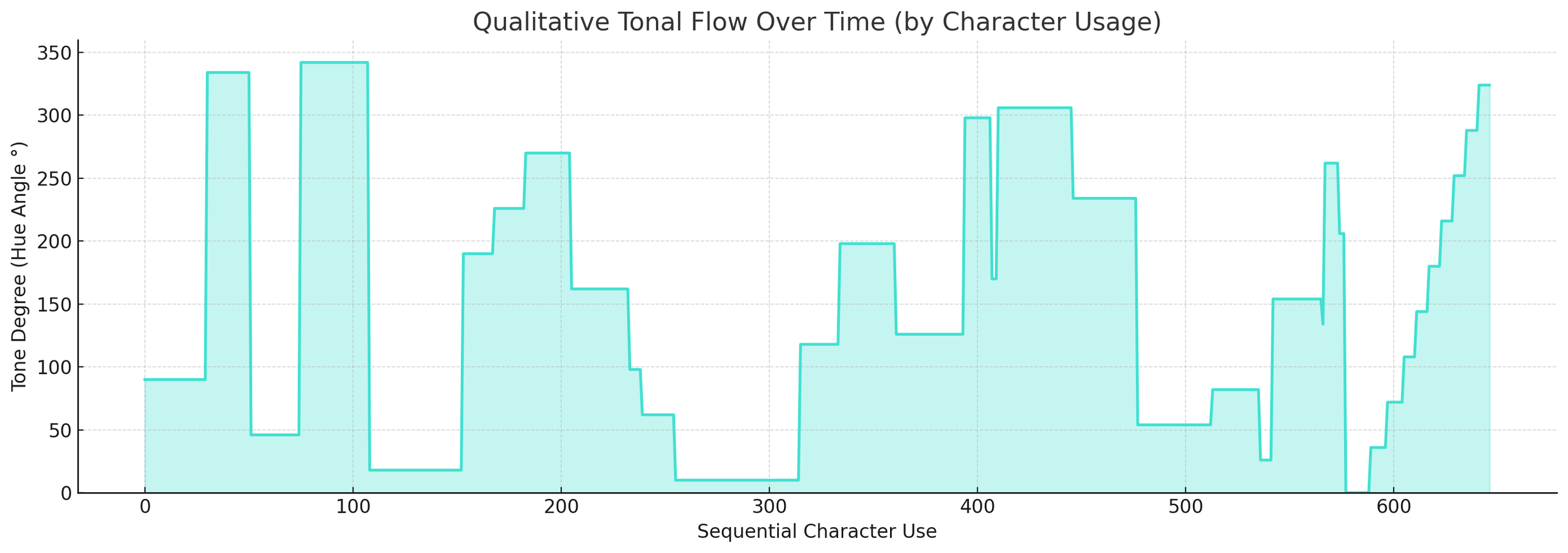

Affective Tokenomics structural analysis for key letters/words

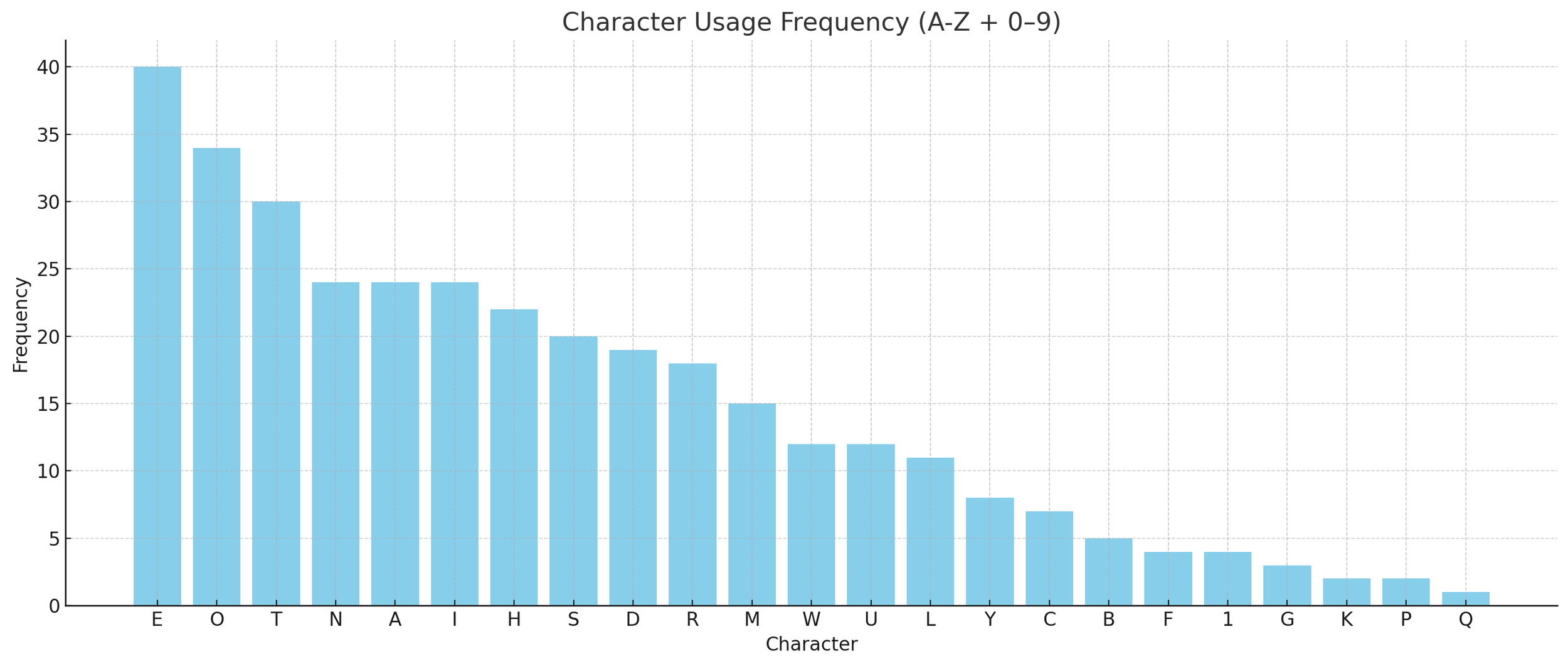

User Feedback

Increases mindfulness during typing—users become more aware of rhythm, pace, and emotional tone

Creates joyful interactions—the musical quality adds delight to mundane communication

Reveals new expressive possibilities—users discover they can "compose" mood through writing style

Democratises creative expression—no musical training required to create sonic experiences

Key Takeaways

Accessibility-first design that treats sensory diversity as creative opportunity

Interactive prototype demonstrating real-time sonochromatic expression

Research-driven approach exploring multimodal communication and affective feedback

Progressive design thinking challenging traditional accessibility models

Web-based and open—designed for broad access and iterative development

SonoChroma demonstrates that when we design for emotional resonance and sensory diversity from the start, we create richer experiences for everyone.

Try It Yourself (Desktop Prototype Only)

Transformative Colour Resonance Environments (TCRE)

Audiovisual Psychology | Resonance Research

Cultural Semiotics | Gamification | 3D Design

Immersive Gallery Curation and Exhibition Design

Transformative Colour-Resonance Environments (TCRE)

#AffectiveDesign #DesignPsychology #ColourPsychology #AudioVisualDesign #DesignByResearch #ProductionDesign #ExhibitionDesign #DigitalMediaCuration #CulturalHeritage #Innovation #ProjectManagement #3D #Gamification

RMIT Course Design | Immersive Audiovisual Psychology | Affective Design Education | Practice-Based Research

Overview

TCRE is a pioneering 24-point course I designed from scratch as inaugural studio lead at RMIT University. It integrates affective design theory, colour psychology, sound design, interaction psychology and virtual exhibition curation into a comprehensive, research-led curriculum that pushes students to understand how audiovisual environments shape emotional and cognitive experience.

Innovative Assessment Design

Students are encouraged to research, prototype and contextualise how light, colour and sound create transformative experiences. Individual user-research pathways sit within the broader fields of UX and interaction design, grounded in technical and creative research into resonance and colour theory.

Deliverables & Student Work Examples

Publicly Accessible Virtual Galleries

Rather than traditional portfolios, students curate their work in 3D navigable exhibition spaces that serve multiple purposes:

Exhibition of final work - Immersive presentation of audiovisual outcomes

Process documentation - Visual research journey displayed spatially

Skills demonstration - Shows technical mastery of 3D environment design

Professional portfolio piece - Shareable, impressive showcase for future opportunities

Student Outcomes & Impact

Student testimonials highlight the course's profound impact:

"TCRE taught me transferable skills regarding colour, sound, and the interplay between them to shape environments/emotions. It definitely helped me develop my sensitivity to colour, sound and emotions and the incorporation of these attributes within design."

— TaiShang Sun, RMIT Alumni

"I learned practical and academic skills that have profoundly shaped my approach to design, including methods in colour psychology theories, semantic colour mapping, resonance analysis, and affective design principles and processes."

— Wunhao Li, 3D Artist / Game Designer

"After TCRE, I have a completely different understanding of colour as a medium of depth, not just surface value. Furthermore, I am now more confident in my knowledge and also feel more attuned to my surroundings."

— Nimuel Nghi Duong, Digital Designer

TCRE demonstrates that affective design education can be both academically rigorous and creatively liberating—equipping students with the research skills and emotional intelligence to design meaningful experiences.

Immersive Production Design

RMIT | Capitol Theatre | Digitising Physical Environments

360 Video Design | VR | End-to-End Video Production

Bridging Physical and Digital Environments

#AffectiveDesign #UX #Interactive #SpatialDesign #Research #XREnvironments #360Video #Innovation #ProjectManagement #AR #VR

Introduction

In an era where hybrid cinematic experiences are reshaping audience engagement, this project asked: How can we extend the cultural presence of historically significant venues like the Capitol Theatre into virtual realms, while also enabling student filmmakers to prototype within immersive production environments?

Responding to this challenge, students were embedded within a real-world simulation of a film production studio, assuming professional roles across all stages of the production pipeline. My role as Lecturer involved directing the project while serving as Director of Photography (DOP) and Production Manager — ensuring students navigated both the creative and technical complexities of XR filmmaking.

Overview

Digitising the Capitol Theatre in 360 was an immersive learning initiative that integrated cutting-edge 360° stereoscopic capture technologies, cinematic storytelling, and extended reality (XR) postproduction workflows. The project formed part of a blended learning pipeline designed to upskill students in emerging media practices through a practice-based research framework. Using the Insta360 Pro 2 camera system, students worked collaboratively to reimagine Melbourne’s iconic Capitol Theatre as an experiential XR canvas.

Project Summary

The workshop unfolded in three distinct but interlinked phases, each designed to scaffold both technical competency and conceptual fluency:

1. Preproduction (Location-Specific)

Students visited the Capitol Theatre to scout the space, develop spatial storyboards, and identify key cinematic perspectives. This phase emphasized production design for 360° capture — a unique challenge requiring spatial choreography and non-linear thinking.

2. Production (On-Site Filming with Insta360 Pro 2)

Using the Insta360 Pro 2, students executed location shoots in 8K stereoscopic video, engaging in:

Camera rig operation and spatial blocking

Sound spatialisation and ambient audio capture

Data wrangling and file management in a multi-terabyte workflow

Students also learned to convert raw 8K footage to 4K for accessible postproduction editing.

3. Postproduction (Remote/Hybrid)

The final stage explored an innovative technique: embedding students’ standard UHD video projects within the Capitol Theatre’s virtual cinema screen. The result was a layered spatial montage — their own narrative short films premiered inside the virtual Capitol. This simulated the prestige of screening at an iconic venue, while maintaining a fully immersive 360° environment.
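Embedding a flat film inside a 360° frame amounts to asking, for every pixel of the equirectangular image, which point on the virtual screen that viewing direction hits. The NumPy sketch below shows that inverse mapping as an illustration of the geometry only, not the students' actual postproduction workflow. It assumes a single (monoscopic) eye at the origin, theatre footage rotated so the screen faces straight ahead, and screen size and distance in arbitrary but consistent units; the function name and parameters are hypothetical.

```python
import numpy as np


def screen_remap(eq_w, eq_h, vid_w, vid_h, distance, screen_w, screen_h, screen_cy=0.0):
    """Map each pixel of an equirectangular frame to a pixel of a flat 'screen' video.

    Assumes the camera sits at the origin looking down +z and the virtual screen is a
    screen_w x screen_h rectangle in the plane z = distance, centred at x = 0, y = screen_cy.
    Returns float32 maps (source x, source y) plus a boolean mask of pixels that hit the screen.
    """
    # Longitude (yaw) and latitude (pitch) at each output pixel centre
    yaw = ((np.arange(eq_w) + 0.5) / eq_w - 0.5) * 2 * np.pi
    pitch = (0.5 - (np.arange(eq_h) + 0.5) / eq_h) * np.pi
    yaw, pitch = np.meshgrid(yaw, pitch)              # both shaped (eq_h, eq_w)

    # Unit viewing direction for every pixel
    dx = np.cos(pitch) * np.sin(yaw)
    dy = np.sin(pitch)
    dz = np.cos(pitch) * np.cos(yaw)

    with np.errstate(divide="ignore", invalid="ignore"):
        # Ray/plane intersection; rays facing away from the screen get NaN
        t = np.where(dz > 1e-9, distance / dz, np.nan)
        s = (t * dx) / screen_w + 0.5                       # 0 = left edge, 1 = right edge
        r = (screen_cy + screen_h / 2 - t * dy) / screen_h  # 0 = top edge, 1 = bottom edge
        mask = (s >= 0) & (s <= 1) & (r >= 0) & (r <= 1)    # NaN compares as False

    map_x = np.where(mask, s * (vid_w - 1), -1).astype(np.float32)
    map_y = np.where(mask, r * (vid_h - 1), -1).astype(np.float32)
    return map_x, map_y, mask
```

The returned maps are in the form accepted by resampling functions such as OpenCV's `cv2.remap`, which could pull each UHD frame onto the screen region before it is composited over the theatre footage; stereoscopic output would need the mapping computed per eye with the camera offset accordingly.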

Deliverables

360° digital twin of the Capitol Theatre interior

Student-led immersive short films, premiered within a virtual theatre screen

Collaborative production logbooks documenting roles, workflows, and reflections

Exported immersive videos suitable for VR headsets and web-based XR platforms

Practice-based research reflections for each student, focused on role-based learning and spatial design insights

Key Insights

Spatial storytelling changes how students understand screen direction and audience agency. Working in 360 forced them to think beyond the frame and into spatial experience design.

Emergent pipelines require interdisciplinary coordination. The film production company model helped students understand the interplay between direction, DOP, postproduction, and digital asset management in new media formats.

Simulating real-world prestige environments creates affective motivation. The act of ‘screening’ their film within the virtual Capitol Theatre had a powerful psychological effect, making the experience tangible and emotionally resonant.

Blended learning supports real-world readiness. The combination of in-location production and remote post workflows mirrors contemporary XR production pipelines used in the industry.

Digitising the Capitol Theatre in 360 not only introduced students to extended reality filmmaking but also provided a framework for collaboration, creative ownership, and spatial literacy in digital storytelling. The outcome was more than just immersive content — it was a generative learning environment, equipping students to prototype the future of cinema itself.

A Virtual Tour of the Capitol

This example of work by RMIT graduate Lucy Ryan takes a creative approach to an immersive audiovisual tour of the Capitol Theatre. It serves as documentation and preservation of cultural place/space, and doubles as a medium for designers to explore the augmentation and bridging of virtual and physical space in an immersive, interactive and there

Immersive Screen Environments

Networked Systems Innovation | Immersive Installation

Data Visualisation | Distributed Media Networking

RMIT VX Robotics Lab: Multi-Screen Array Innovation

Co-Design for Networked Systems | Technical Innovation | Interdisciplinary Design/STEM Collaboration

#UX #XR #Interdisciplinary #Strategy #Research #SpatialDesign #SystemsThinking #Innovation #ProjectManagement #EmpathyDesign

The Challenge

How do we expand the capabilities of advanced research facilities to serve both technical innovation and creative education?

RMIT's Virtual Experiences Laboratory (VXLab) featured a sophisticated tiled display technology—an array of networked screens capable of ultra-high-resolution visualization. While powerful for robotics research and engineering simulations, its potential for immersive design, collaborative creativity, and experiential learning remained unexplored.

The opportunity: Pioneer novel use cases that bridge technical capability with human-centered design.

Overview

Working directly with Dr. Ian Peake (Technical Manager, VXLab), I proposed and implemented innovative methods for the VXLab's multi-screen array that extended its functionality beyond engineering applications into immersive audiovisual design, affective interaction research, and collaborative learning experiences.

My role: Strategic design lead, project manager, and technical coordinator—bridging creative objectives with existing technical constraints.

Innovation Objectives

Technical Expansion

Develop custom tools for synchronized multi-screen audiovisual content

Explore distributed, networked visualization beyond single-user workflows

Push the boundaries of resolution, scale, and synchronization capabilities

Create replicable frameworks others could build upon

Educational Integration

Transform research lab into teaching resource accessible to design students

Demonstrate applications beyond STEM disciplines (design, psychology, creative practice)

Enable student projects that wouldn't be possible with standard equipment

Build cross-disciplinary collaboration between design and engineering

Creative Exploration

Test immersive audiovisual installations at unprecedented scale

Prototype affective design experiments using distributed visual systems

Explore empathy design through composite identity visualization

Create experiences that blur boundaries between physical and digital space

Project Methodology

Collaborative Co-Design Process

Phase 1: Discovery & Ideation

Technical consultation with Dr. Peake on system capabilities and limitations

Brainstorming sessions identifying novel use cases

Prototyping workflows for multi-screen synchronization

Testing technical feasibility of proposed applications

Phase 2: Development & Testing

Custom tool development for ultra-high-resolution content distribution

Synchronization protocols for networked screen arrays

Student training on system operation and creative possibilities

Iterative refinement based on real-world usage
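Distributing ultra-high-resolution content across a tiled wall usually begins by deciding which region of one master canvas each screen shows. A minimal sketch, assuming a uniform grid of identical panels: the master canvas includes extra pixels for the physical bezels between panels (bezel compensation), so an image crossing a seam stays aligned. VXLab's actual grid size, panel resolution and bezel widths are not stated here, so the names and any numbers passed in are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tile:
    col: int
    row: int
    x: int   # left edge of this panel's crop within the master canvas (pixels)
    y: int   # top edge of the crop
    w: int   # crop width (equals the panel's native width)
    h: int   # crop height


def tile_crops(cols: int, rows: int, panel_w: int, panel_h: int,
               bezel_x: int = 0, bezel_y: int = 0):
    """Split one master canvas across a cols x rows video wall.

    bezel_x / bezel_y are the hidden gaps between panels, expressed in pixels;
    the canvas reserves that space so straight lines stay straight across seams.
    Returns (canvas_w, canvas_h, tiles) with tiles listed row by row.
    """
    canvas_w = cols * panel_w + (cols - 1) * bezel_x
    canvas_h = rows * panel_h + (rows - 1) * bezel_y
    tiles = [
        Tile(c, r, c * (panel_w + bezel_x), r * (panel_h + bezel_y), panel_w, panel_h)
        for r in range(rows)
        for c in range(cols)
    ]
    return canvas_w, canvas_h, tiles
```

Each display node could then play the same master file through its own crop, for example with ffmpeg's `crop=w:h:x:y` filter, leaving playback synchronisation as a separate concern.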

Phase 3: Implementation & Exhibition

Student-led projects utilizing the expanded capabilities

Public exhibitions demonstrating technical and creative outcomes

Documentation of workflows for future use

Knowledge transfer to VXLab staff and other educators

Key Applications Developed

1. Composite Empathy/Identity Mapping

Concept: Using the multi-screen array to create composite facial overlays exploring concepts of identity, empathy, and collective representation.

Technical Execution:

Individual portrait photographs mapped across multiple screens

Overlay algorithms blending facial features into composite identities

Real-time adjustments to explore different weighting and combinations

Ultra-high resolution allowing detailed facial feature analysis
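At its simplest, weighted blending of this kind is a per-pixel weighted average of portraits that have already been aligned, for instance by warping each face so the eyes and mouth land on the same pixel positions. The NumPy sketch below covers only that averaging step; alignment is assumed to have been done beforehand, and the function name is illustrative rather than the tool used in the lab.

```python
import numpy as np


def weighted_composite(faces, weights):
    """Blend pre-aligned face images into one composite by weighted averaging.

    faces:   sequence of n images with identical shape (height, width, channels),
             uint8, already aligned (alignment is assumed, not performed here)
    weights: n non-negative numbers; they are normalised to sum to 1
    """
    stack = np.asarray(faces, dtype=np.float64)           # shape (n, h, w, c)
    w = np.asarray(weights, dtype=np.float64)
    if w.shape != (stack.shape[0],) or np.any(w < 0) or w.sum() == 0:
        raise ValueError("need one non-negative weight per face, not all zero")
    w = w / w.sum()
    # Contract the weight vector against the image axis: sum_i w_i * face_i
    composite = np.tensordot(w, stack, axes=1)
    return np.clip(np.rint(composite), 0, 255).astype(np.uint8)
```

Changing the weights and re-running is one way to support the real-time exploration of different weightings and combinations described above.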

Research Questions:

How do we perceive "averaged" faces across demographic groups?

Can visual composites help develop empathy for collective experiences?

What happens when individual identity is abstracted into group representation?

Outcomes:

Powerful visual tool for discussions of diversity, representation, identity

Student engagement with complex social concepts through design

Demonstration of technology serving humanistic inquiry

2. Distributed Audiovisual Affective Design

Concept: Immersive multi-screen environments using color, light, and sound to create emotionally resonant spaces.

Technical Execution:

Synchronized video playback across entire screen array

Spatial audio integration creating immersive soundscapes

Live performance/lecture delivery within the environment

Real-time content manipulation responding to user/audience input
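A common way to keep playback in step across networked displays is to share a clock rather than stream per-frame commands: every node is told the same start time and computes the current frame locally. The sketch below assumes node clocks are already synchronised (for example with NTP or PTP) and a fixed frame rate; it is a generic technique, not the specific synchronisation protocol developed for VXLab, and the function name is hypothetical.

```python
import math
from typing import Optional


def frame_to_show(now_s: float, start_s: float, fps: float,
                  total_frames: int, loop: bool = True) -> Optional[int]:
    """Frame index every node should display at wall-clock time now_s.

    All nodes receive the same start_s and derive their frame independently,
    so no per-frame network traffic is needed once playback has started.
    Returns None before the start time, or after the end when not looping.
    """
    elapsed = now_s - start_s
    if elapsed < 0:
        return None
    frame = math.floor(elapsed * fps)
    if loop:
        return frame % total_frames
    return frame if frame < total_frames else None
```

Because each node only needs the shared start time, a late-joining or restarted screen can rejoin mid-playback without coordination; the remaining error is bounded by how well the clocks agree.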

Applications:

Lectures as experiences - transforming standard presentations into immersive events

Affective mood regulation - testing how large-scale audiovisual environments influence emotional states

Collaborative design critique - viewing student work at unprecedented scale and detail

Event design prototyping - simulating installations before physical production

3. UX Research Visualization at Scale

Concept: Displaying complex user journey maps, research data, and design processes across multiple screens for collaborative analysis.

Benefits:

Spatial organization - different screens for different user personas, journey stages, or data sets

Collaborative viewing - entire teams can view and discuss without crowding around a single monitor

High detail retention - zoom into specific data points without losing overall context

Comparative analysis - side-by-side visualization of different design iterations

Advancing Research for Immersive Technology

The expanded VXLab capabilities now support:

For Industry Partners:

System design testing - complex combinations of systems, models, and data

Collaborative prototyping - distributed teams working on shared visualizations

High-resolution rendering - immersive audiovisual content at scale

Novel visualization networking - exploring new forms of data representation

For Research:

Multi-modal data display - integrating quantitative and qualitative research

Spatial organization of complex information - using physical space to structure cognitive understanding

Collaborative analysis - enabling group interpretation of research findings

Experimentation with scale and resolution - exploring how size affects perception and understanding

Student Learning Outcomes

Technical Skills

Operating advanced multi-screen visualization systems

Content creation for ultra-high-resolution displays

Networked system coordination and synchronization

Troubleshooting complex technical setups

Design Thinking

Designing for spatial, not just screen-based, experiences

Understanding how scale affects emotional and cognitive response

Collaborative design in shared physical-digital environments

Systems thinking for networked technologies

Professional Practice

Working within institutional technical constraints

Communicating across disciplinary boundaries (design ↔ engineering)

Managing complex projects requiring multiple stakeholders

Adapting creative visions to available resources

Institutional Impact

Expanded Facility Capabilities

Before this project, VXLab was primarily used for engineering visualization and robotics research. Now it serves:

Design students exploring immersive experiences

Psychology research on perception and emotion

Cross-disciplinary collaboration between STEM and creative faculties

Industry partnerships requiring large-scale visualization

Model for Interdisciplinary Innovation

This project demonstrated that:

Technical facilities can serve creative disciplines when approached collaboratively

Student projects can drive institutional innovation through novel use cases

Cross-faculty collaboration creates value beyond what individual departments could achieve

Agile, experimental approaches can expand capabilities without major infrastructure investment

Key Insights

Strategic Design Thinking in Action

The success of this project came from:

Deep listening - understanding technical constraints before proposing solutions

Collaborative framing - positioning design as complementary to, not competing with, engineering uses

Incremental innovation - starting small, proving value, expanding gradually

Documentation and knowledge transfer - ensuring innovations outlive individual projects

Bridging Technical and Creative Cultures

Lessons learned:

Speak both languages - understand technical specifications AND creative vision

Find mutual benefit - how does design use advance technical capabilities?

Manage expectations - creative ambition must align with technical reality

Build trust through delivery - prove concepts work before scaling up

Resource Constraints Drive Innovation

Working within limitations:

Can't change the hardware? Change how it's used.

Can't access expensive software? Develop custom solutions.

Can't dedicate facility full-time? Design workflows for shared use.

Can't predict all applications? Create flexible frameworks others can adapt.

Deliverables & Documentation

Custom Tools Developed:

Multi-screen content distribution system

Synchronization protocols for networked displays

Workflow documentation for future users

Training materials for students and staff
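The multi-screen content distribution system is not documented in detail here. The sketch below shows one common approach, not the VXLab tool itself. It assumes a uniform grid of identical displays fed from a single master canvas. Each screen is assigned a crop rectangle of that canvas, and bezel gaps are compensated so imagery lines up across the physical frames. The grid size, panel resolution and bezel width are illustrative assumptions, not VXLab's specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Viewport:
    """Region of the master canvas shown on one physical screen (pixels)."""
    col: int
    row: int
    x: int
    y: int
    width: int
    height: int


def canvas_size(cols: int, rows: int, screen_w: int, screen_h: int,
                bezel_px: int = 0) -> tuple[int, int]:
    """Total master-canvas size, including the hidden pixels behind bezels."""
    return (cols * screen_w + (cols - 1) * bezel_px,
            rows * screen_h + (rows - 1) * bezel_px)


def tile_canvas(cols: int, rows: int, screen_w: int, screen_h: int,
                bezel_px: int = 0) -> list[Viewport]:
    """Assign each screen in a cols x rows grid its crop of the master canvas.

    Pixels that fall behind a bezel are skipped rather than squeezed in,
    so a straight line drawn across the canvas stays straight across the wall.
    Viewports are returned in row-major order (left to right, top to bottom).
    """
    if cols < 1 or rows < 1:
        raise ValueError("grid must have at least one screen")
    return [
        Viewport(col, row,
                 x=col * (screen_w + bezel_px),
                 y=row * (screen_h + bezel_px),
                 width=screen_w, height=screen_h)
        for row in range(rows)
        for col in range(cols)
    ]
```

Take a hypothetical 2 x 2 wall of 1920 x 1080 panels with a 20-pixel bezel. The master canvas is 3860 x 2180, and the bottom-right screen shows the region starting at (1940, 1100). Each display client would render only its own viewport. Keeping those clients frame-synchronised is a separate problem that this sketch does not address.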

Student Projects:

Composite identity visualizations exploring empathy and representation

Immersive audiovisual environments for affective design research

Large-scale UX research mapping and collaborative analysis

Experimental spatial narratives using distributed screens

Knowledge Outputs:

Case studies documenting technical and creative processes

Best practices for cross-disciplinary collaboration

Frameworks for expanding research facility applications

Institutional memory ensuring sustainability beyond individual projects

Future Directions

Potential Expansions

Integration with motion tracking for responsive environments

Real-time generative content based on audience/user data

Cross-location networking (connecting VXLab with remote sites)

AR/VR integration creating hybrid physical-virtual experiences

Ongoing Applications

Annual student exhibitions utilizing the multi-screen array

Industry partner demonstrations and prototyping sessions

Research projects exploring perception, cognition, and emotion at scale

Continued tool development and workflow refinement

Key Takeaways

Interdisciplinary innovation bridging design, engineering, and research

Expanded institutional capability through creative reframing of existing resources

Student empowerment via access to advanced professional-grade systems

Collaborative model demonstrating value of cross-faculty partnerships

Sustainable impact through documentation and knowledge transfer

Strategic design thinking navigating technical constraints to achieve creative goals

The VXLab multi-screen project demonstrates that innovation doesn't always require new infrastructure—sometimes it requires new thinking about what existing resources can do.

Live interaction audiovisual Performance

Tony Yap Company | production design

sound design | lighting design | Live Audiovisual Performance


Mad Monk - Production Design & Live AV Performance

Tony Yap Company | Technical Production Design | Affective Environment Design | Live Performance

#AffectiveDesign #LightingDesign #SoundDesign #AudioVisualDesign #ProductionDesign #LivePerformance #InterculturalArts #ImmersiveExperience

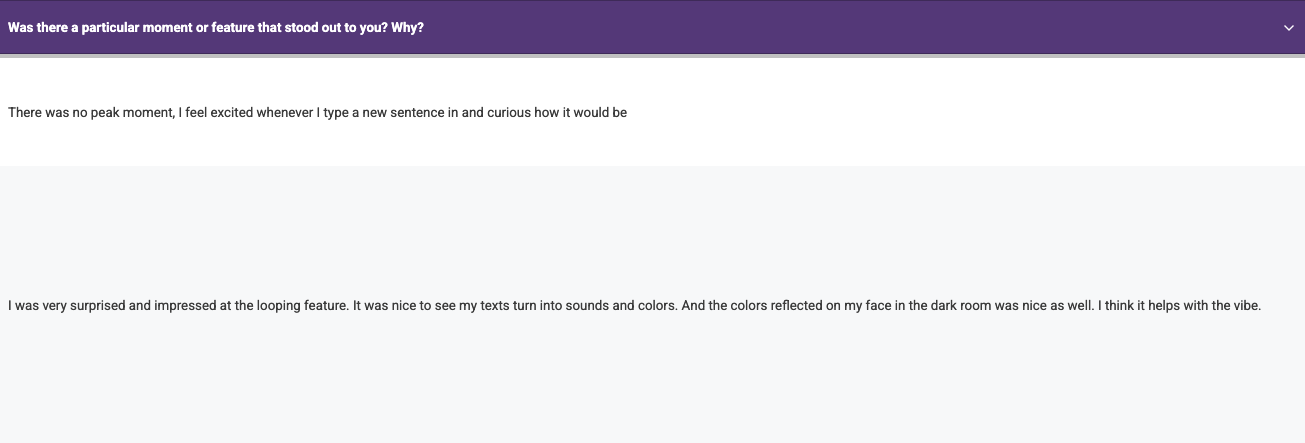

Overview

Mad Monk is a live performance work by acclaimed intercultural artist Tony Yap, featured as part of FRAME: A Biennial of Dance at Temperance Hall, Melbourne. I served as the production designer, creating an integrated audiovisual environment that amplified the emotional and spiritual dimensions of Yap's embodied practice.

This project demonstrates how affective design principles—sound, light, colour, spatial relationships—can create powerful performative experiences that move beyond spectacle into transformation.

About Tony Yap

Tony Yap is an internationally recognised multidisciplinary artist born in Malaysia, known for bringing non-Western perspectives to contemporary dance and performance. His practice is grounded in Asian philosophies, sensibilities, and forms, creating work that bridges cultural traditions with contemporary innovation.

Recognition:

Asialink residency grants spanning a decade

Australia Council for the Arts Dance Fellowship recipient

Founding Creative Director, Melaka Arts and Performance Festival (MAP Fest)

Significant contributor to intercultural discourse in contemporary performance

Yap's work embodies the intersection of movement, spirituality, and cultural inquiry—making him an ideal collaborator for exploring affective design in live performance contexts.

The Challenge

How do you create an audiovisual environment that honors traditional spiritual practices while serving contemporary performance innovation?

Mad Monk explores themes of meditation, embodiment, and spiritual seeking through Yap's physically demanding, emotionally raw performance practice. The design needed to:

Support rather than dominate - the environment should amplify Yap's presence, not compete with it

Create emotional resonance - light and sound as active participants in the affective journey

Bridge cultural contexts - honoring Asian aesthetic sensibilities while speaking to diverse audiences

Enable transformation - facilitate shifts in audience consciousness and emotional state

My Role: Production Designer

As production designer, I was responsible for all aspects of the audiovisual environment:

Lighting & Colour Design

Conceptualising and executing lighting states that mirror emotional and spiritual transitions

Color palette development reflecting affective intentions (warm/cool, saturated/muted, bright/shadow)

Spatial use of light creating intimacy, vastness, isolation, connection as needed

Real-time lighting adjustments responding to performance dynamics

Sound Design & Composition

Creating sonic landscapes that complement and contrast with silence

Frequency selection based on psychoacoustic principles (resonance, tension, release)

Spatial audio design for immersive audience experience

Live sound mixing responding to performer's energy and pacing

Performance Interaction Design

Designing cues and transitions that feel organic rather than technical

Creating visual-sonic moments that punctuate emotional shifts

Balancing planned design with improvisational responsiveness

Collaborating with the performer on how the environment supports his movement vocabulary

Live AV Interaction Performance

Real-time operation of lighting, sound, and visual systems during performance

Responsive adjustments based on audience energy and performer needs

Maintaining technical precision while allowing for spontaneity

Embodied presence as co-performer through design decisions

Design Approach: Affective Environments

Light as Emotional Language

Rather than treating lighting as purely functional (illumination) or decorative, I approached it as an affective medium:

Warm light = groundedness, safety, embodiment, earth

Cool light = transcendence, distance, spiritual seeking, sky

High contrast = dramatic tension, duality, inner conflict

Soft diffusion = gentleness, vulnerability, opening

Sharp edges = intensity, focus, clarity, precision

These weren't arbitrary associations—they drew on both empirical color psychology research and cultural/archetypal meanings resonant with Yap's thematic concerns.

Sound as Spatial-Emotional Architecture

Sound design created:

Sonic containers - frequencies that hold and support emotional states

Textural variety - smooth/rough, continuous/punctuated, organic/synthetic

Spatial depth - near/far, surrounding/directional, intimate/vast

Silence as presence - strategic absence creating tension, anticipation, rest

The sound wasn't background—it was architecture for emotional experience.

Color Psychology in Action

Color choices were informed by research into affective color theory:

Red/Orange - activation, embodiment, passion, life force

Blue/Indigo - contemplation, spirituality, depth, mystery

White - emptiness, potential, clarity, transcendence

Shadow/Black - unknown, unconscious, void, possibility

These weren't "moods" imposed on the audience—they were invitations into emotional landscapes the performance explored.

Integration with Performer's Practice

Collaborative Process

Working with Tony Yap required:

Deep listening - understanding his movement vocabulary, spiritual references, artistic intentions

Cultural sensitivity - honoring Asian aesthetic principles (negative space, subtlety, restraint)

Improvisational readiness - responding in real-time to shifts in performance energy

Shared language - developing communication that bridged technical and artistic vocabularies

Design as Dialogue

The audiovisual environment wasn't imposed on the performance—it emerged through dialogue:

Yap's movement suggested color and sound possibilities

Design choices opened new movement pathways for Yap

Audience energy influenced both performer and design responses

The result: a living, breathing, co-created experience

Context: FRAME Biennial & ALIENS OF EXTRAORDINARY ABILITY

Mad Monk was presented within ALIENS OF EXTRAORDINARY ABILITY—a curated evening of performances exploring otherness, queerness, and transformative embodiment.

Curated by Luke George (Temperance Hall Artistic Associate), ALIENS was inspired by his experiences in Brooklyn's grassroots performance scene. It takes its title from the artist visa required to work in the USA, reclaiming "othering" as a celebration of an electric, inclusive, spontaneous creative community.

This context mattered—the design needed to:

Honor Mad Monk's spiritual depth while existing within a queer, experimental, celebratory framing

Create intimacy within a multi-performance evening

Respect both traditional practice and radical contemporary expression

Outcomes & Impact

Audience Experience

The integration of performer, light, sound, and space created an immersive emotional journey:

Audiences reported feeling transported, moved, held

The environment supported rather than distracted from Yap's powerful physical presence

Color and sound transitions guided emotional pacing without feeling manipulative

Moments of darkness, silence, and stillness had as much impact as spectacle

Artistic Collaboration

For Tony Yap, the design:

Provided sonic and visual support for vulnerable, demanding physical work

Amplified emotional and spiritual dimensions of the performance

Created containers for transitions between states (grounded ↔ transcendent, active ↔ still)

Honored cultural roots while embracing contemporary innovation

Creative Validation

Inclusion in FRAME Biennial and the ALIENS program demonstrated recognition of:

High-quality production design as integral to performance art

Affective design's capacity to serve complex artistic visions

Cross-cultural collaboration enriching contemporary performance

Technical excellence in service of emotional and spiritual depth

Technical Specifications

Lighting:

Color mixing LED fixtures (full RGB spectrum control)

Programmable cues with manual override capability

DMX control for real-time adjustments

Spatial arrangement creating depth and intimacy
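DMX control can be illustrated with a short sketch. DMX512 carries up to 512 channel slots per universe, and each slot holds an 8-bit level from 0 to 255. The sketch assumes a hypothetical fixture that takes three consecutive channels for red, green and blue. Real fixture profiles vary, so the manufacturer's channel map is the authority. Under that assumption, a lighting state becomes a 512-byte frame, and a linear crossfade between two frames gives a simple cue transition. The colour values are illustrative only, not the cues used in Mad Monk. Sending frames to hardware, for example through a USB-DMX interface or a network protocol such as Art-Net, is out of scope.

```python
from dataclasses import dataclass

UNIVERSE_SIZE = 512  # DMX512 carries up to 512 channel slots per universe


@dataclass(frozen=True)
class RGBFixture:
    """Hypothetical fixture using 3 consecutive channels: red, green, blue."""
    start_address: int  # 1-based DMX address of the red channel

    def __post_init__(self) -> None:
        if not 1 <= self.start_address <= UNIVERSE_SIZE - 2:
            raise ValueError("fixture's three channels must fit in one universe")


def to_level(value: float) -> int:
    """Clamp and round a level to the 8-bit range a DMX slot can carry."""
    return max(0, min(255, round(value)))


def build_frame(state: dict[RGBFixture, tuple[float, float, float]]) -> bytearray:
    """Write each fixture's RGB levels into a 512-slot frame (unused slots stay 0)."""
    frame = bytearray(UNIVERSE_SIZE)
    for fixture, rgb in state.items():
        if len(rgb) != 3:
            raise ValueError("expected exactly three levels (R, G, B)")
        offset = fixture.start_address - 1  # convert 1-based address to index
        frame[offset:offset + 3] = bytes(to_level(c) for c in rgb)
    return frame


def crossfade(a: bytearray, b: bytearray, t: float) -> bytearray:
    """Linear crossfade between two frames: t=0 returns a, t=1 returns b."""
    t = max(0.0, min(1.0, t))
    return bytearray(to_level(x + (y - x) * t) for x, y in zip(a, b))
```

Suppose `warm` and `cool` are frames built for the same fixture, such as `build_frame({RGBFixture(1): (255, 140, 60)})` for a "grounded" state. Evaluating `crossfade(warm, cool, t)` while `t` ramps from 0 to 1 over a few seconds moves the fixture from one state to the other. A purely linear fade in 8-bit values can look uneven at low intensities, which is one reason lighting consoles offer fade curves. That refinement is left out here.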

Sound:

Multi-channel playback system

Live mixing console for real-time control

Spatial audio positioning for immersive experience

Custom sound design integrated with existing scores/soundscapes
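Spatial audio positioning is not specified further here. The sketch below shows one standard technique, which is not necessarily the one used in this production. Pairwise constant-power panning places a sound between the two nearest speakers of an assumed ring of equally spaced loudspeakers. It keeps the sum of squared gains at 1, so perceived loudness stays roughly steady as the sound moves around the audience. The ring layout is a hypothetical assumption.

```python
import math


def equal_power_pan(pan: float) -> tuple[float, float]:
    """Constant-power gains for a pan position from -1 (first) to +1 (second)."""
    pan = max(-1.0, min(1.0, pan))
    theta = (pan + 1.0) * math.pi / 4.0  # maps pan to 0..pi/2
    return math.cos(theta), math.sin(theta)


def ring_gains(azimuth_deg: float, n_speakers: int) -> list[float]:
    """Gains for a ring of equally spaced speakers, speaker k at k * 360/n degrees.

    The source is panned between the two speakers either side of its azimuth;
    all other speakers stay silent.
    """
    if n_speakers < 2:
        raise ValueError("need at least two speakers")
    spacing = 360.0 / n_speakers
    pos = (azimuth_deg % 360.0) / spacing
    index = int(pos)
    frac = pos - index  # 0 at one speaker, approaching 1 at the next
    first, second = index % n_speakers, (index + 1) % n_speakers
    g_first, g_second = equal_power_pan(frac * 2.0 - 1.0)
    gains = [0.0] * n_speakers
    gains[first] = g_first
    gains[second] = g_second
    return gains
```

On a hypothetical four-speaker square, `ring_gains(45, 4)` splits a sound equally between the speakers at 0 and 90 degrees. Each gets about 0.707 (roughly -3 dB) rather than 0.5, because 0.5 each would make the sound seem quieter midway between speakers.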

Performance Space:

Temperance Hall, Melbourne (intimate, atmospheric venue)

Audience proximity enabling deep connection

Flexible lighting positions for varied spatial effects

Key Insights: Affective Design in Live Performance

Design as Presence, Not Decoration

The audiovisual environment wasn't about something—it was something. An active participant in the performance, shaping experience in real-time.

Collaboration Requires Cultural Humility

Working with an artist whose practice is rooted in non-Western traditions required:

Listening more than asserting

Learning cultural contexts before making design choices

Honoring subtlety and restraint over Western tendencies toward spectacle

Allowing silence and emptiness as powerful design choices

Emotion is Data

Affective design isn't vague or subjective—it draws on:

Empirical research in color psychology and psychoacoustics

Cultural semiotics and archetypal associations

Performer feedback and audience response

Embodied experience and intuitive resonance

All of these are forms of data that inform design decisions.

Live Performance Demands Responsive Design

Unlike fixed media, live performance requires:

Real-time responsiveness to performer and audience energy

Balancing planned design with improvisational flexibility

Technical precision enabling spontaneity

Designer as co-performer, not just technician

Key Takeaways

Integrated production design across lighting, sound, color, and spatial relationships

Affective environments supporting emotional and spiritual transformation

Cross-cultural collaboration honoring Asian aesthetic principles in contemporary context

Live performance requiring real-time responsiveness and embodied design presence

Part of curated biennial celebrating otherness, queerness, and radical embodiment

Design as co-performer rather than backdrop or decoration

Mad Monk demonstrates that affective design isn't limited to digital interfaces or research labs—it can create profound experiences in live, embodied, performative contexts where light, sound, body, and presence converge.

All images © Cy Gorman 2026

Custom Interactive Installation and XR

Interactive AFFECTIVE Installation | Augmented reality prototyping

rendered 3D photogrammetry | sound design and composition


Immersive/Interactive Dev for Tony Yap Co: MERGENESIS

#AR #3dScanning #InteractionDesign #InstallationDesign #LightingDesign #SoundDesign #ProductionDesign #CreativeDirection #Videography #PostProduction #360Video

Introduction

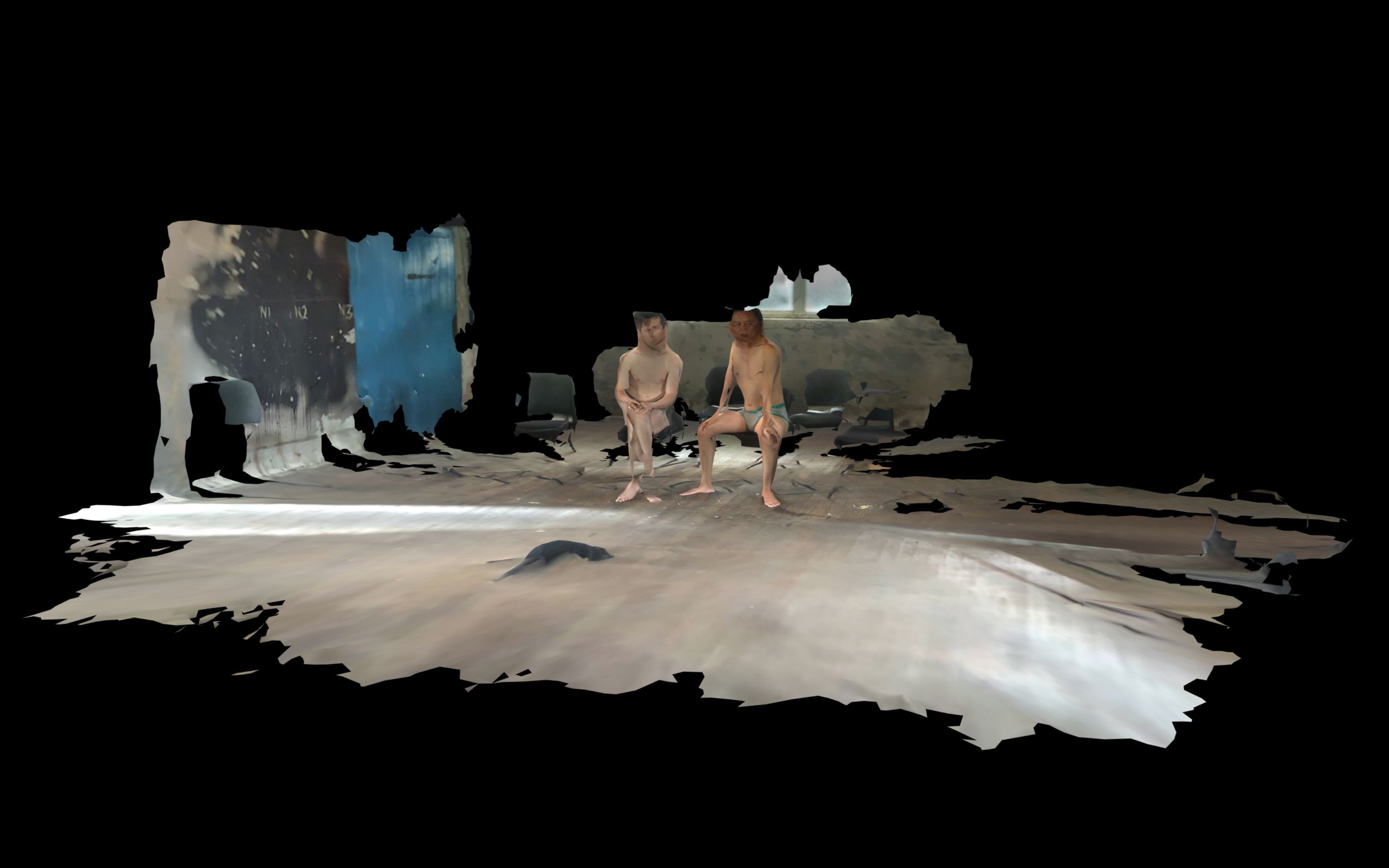

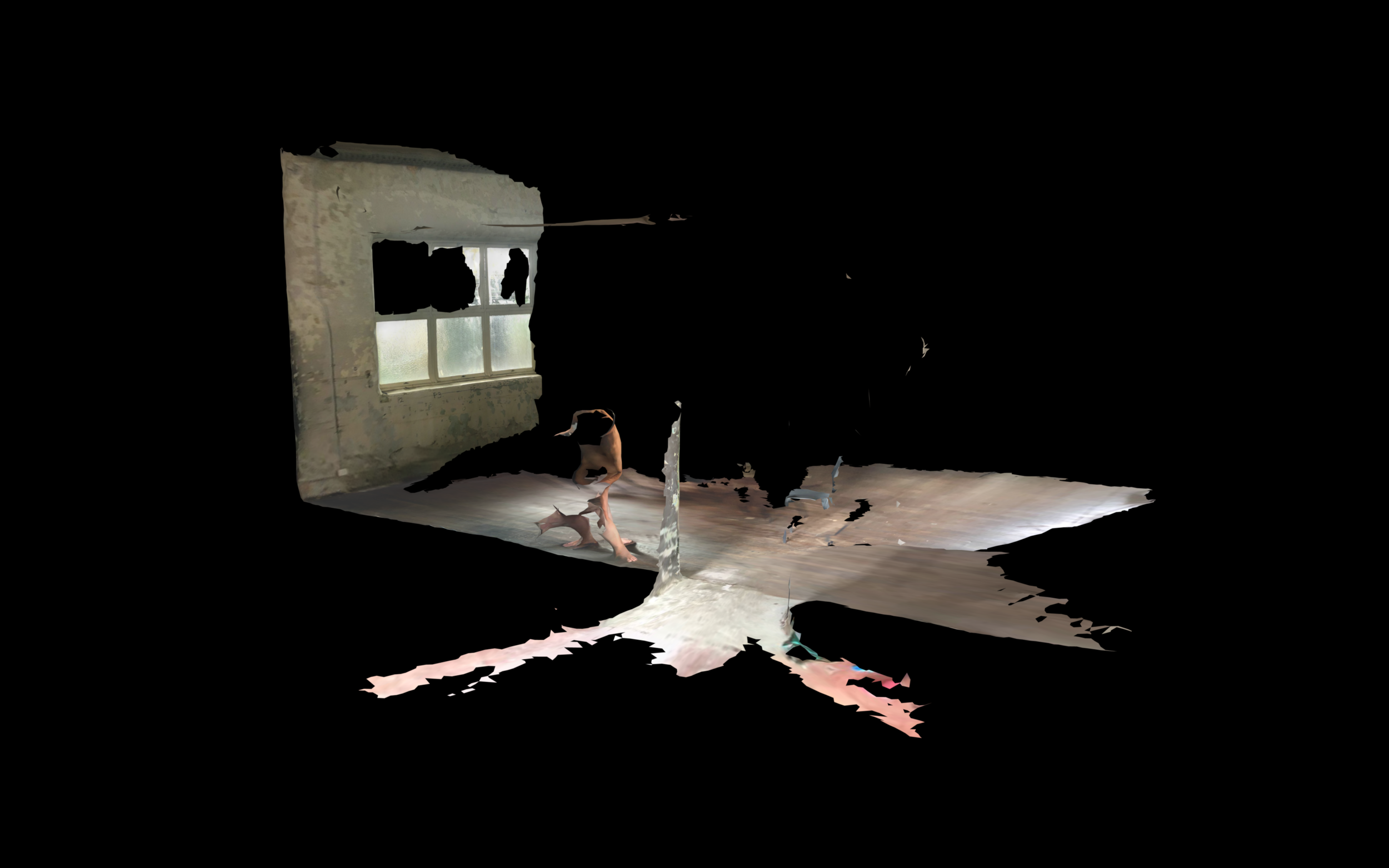

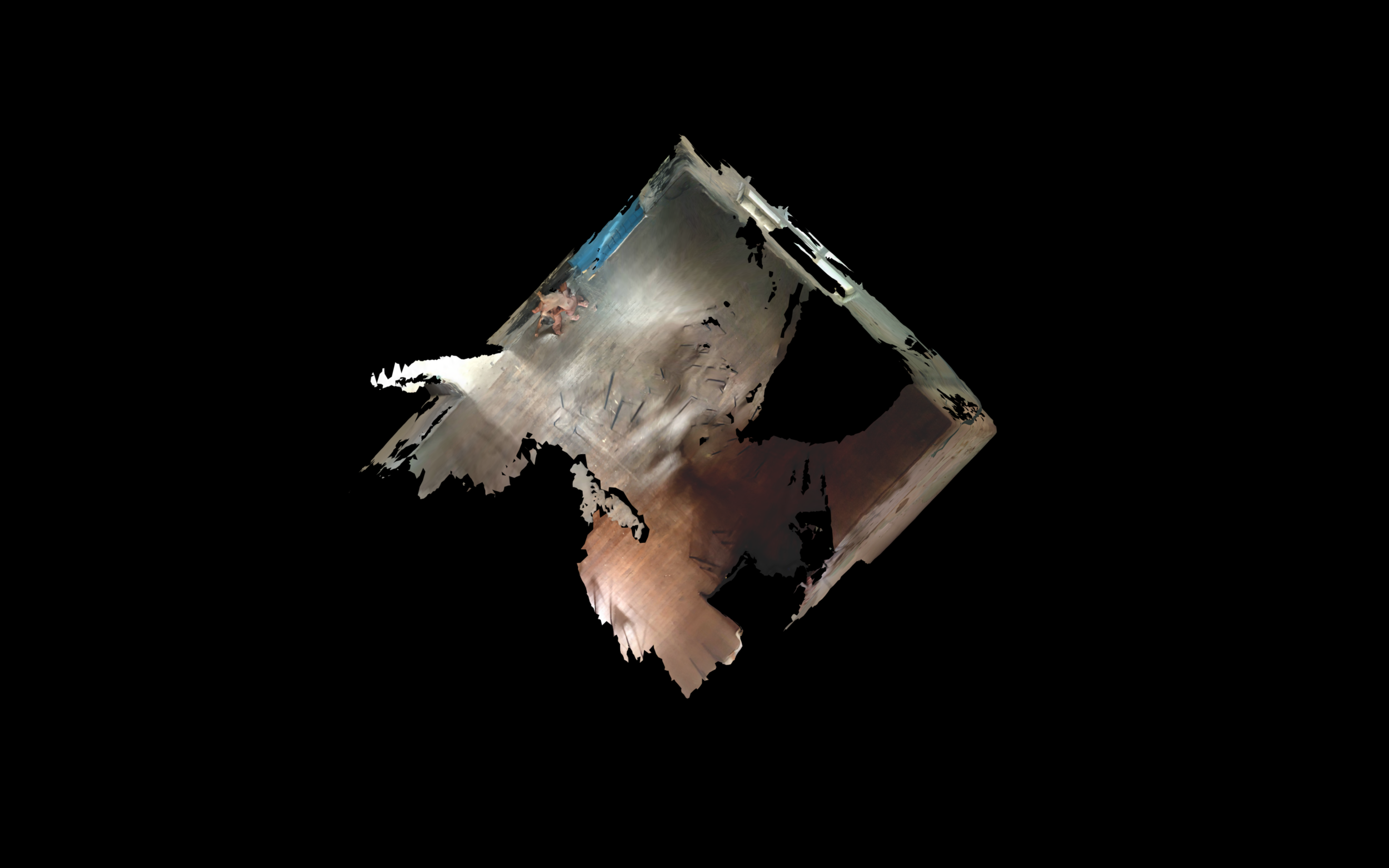

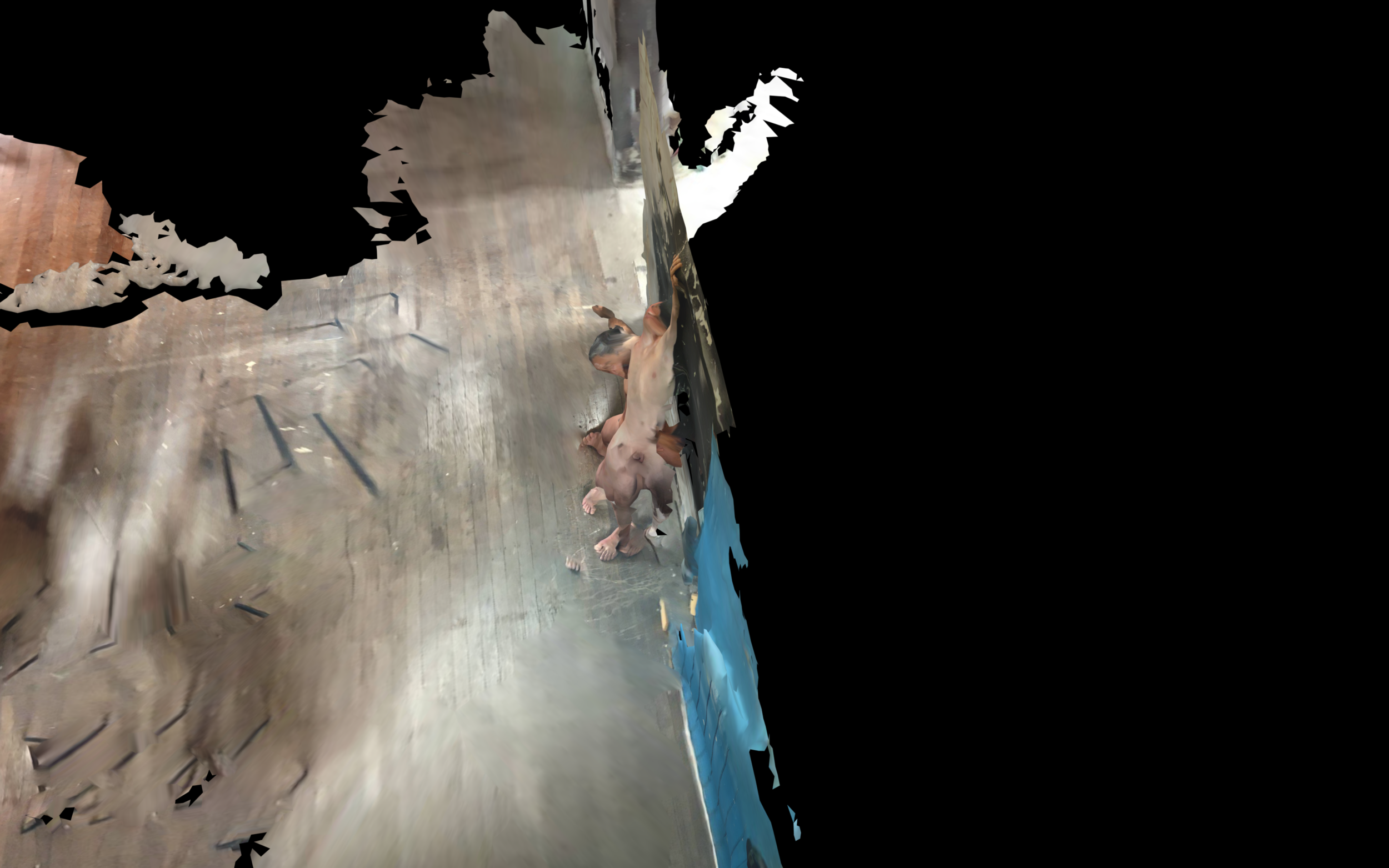

This two-part body of work was produced in collaboration with performance artist Tony Yap and guest artist Brendan O’Connor. It was carried out at Abbotsford Convent, Melbourne, in January 2021 and post-produced in 2022.

Part 1 Overview - Interactive/Performance/Installation/Environment

Part One of Mergenesis explores dynamic and responsive sound-colour resonance to inform and influence performance. Beyond the performance itself, it showcases my custom-built interactive light/sound installation, as well as my 360-camera cinematography and post-production.

Creative Direction

Installation Design

Digital Media Production Design

3D Scanning and acquisition of virtual reality (VR) and augmented reality (AR) assets

3D post-production and design

Rendering and curation of digital environment selected stills

Production design thinking and planning for further iterative development including image/digital XR assets

Sound Design

Music Composition - ‘Holding Pattern’

Both video examples here were produced with 360-degree cameras. This captured 360 video (for VR or an interactive browser experience) as well as a morphed 16:9 version. Combining 360 capture with post-production for standard HD formats produces a unique perspective on both object and environment. Spectrum 1 and Spectrum 2 were recorded at 5.7K (VR) for output at 4K resolution on screen. The versions shown here are lower-resolution MVP demonstration examples.
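The 16:9 output described above comes from reframing spherical 360 footage into a flat view. Video tools typically do this internally. The sketch below shows the underlying geometry and is not the specific software used here. It assumes the 360 master is stored as an equirectangular frame, using 5760 x 2880 as a typical 5.7K size. It also assumes a pinhole "virtual camera" with a chosen yaw, pitch and horizontal field of view. Under those assumptions, each output pixel can be traced back to a source pixel.

```python
import math


def view_to_equirect(px: float, py: float, out_w: int, out_h: int,
                     hfov_deg: float, yaw_deg: float, pitch_deg: float,
                     eq_w: int, eq_h: int) -> tuple[float, float]:
    """Map a point in a flat (rectilinear) output frame to the equirectangular
    source frame. px, py are pixel coordinates with (0, 0) at the top-left.
    Yaw turns the virtual camera right, pitch tilts it up (degrees).
    """
    # Focal length in pixels for the requested horizontal field of view.
    f = (out_w / 2) / math.tan(math.radians(hfov_deg) / 2)

    # Ray through the pixel in camera space: X right, Y up, Z forward.
    x = px - out_w / 2
    y = -(py - out_h / 2)
    z = f

    # Tilt the ray by the pitch angle (rotation about the X axis).
    p = math.radians(pitch_deg)
    y, z = y * math.cos(p) + z * math.sin(p), -y * math.sin(p) + z * math.cos(p)

    # Turn the ray by the yaw angle (rotation about the vertical Y axis).
    t = math.radians(yaw_deg)
    x, z = x * math.cos(t) + z * math.sin(t), -x * math.sin(t) + z * math.cos(t)

    # Convert the ray to longitude/latitude, then to equirectangular pixels.
    lon = math.atan2(x, z)                 # -pi..pi, 0 = straight ahead
    lat = math.atan2(y, math.hypot(x, z))  # -pi/2..pi/2, 0 = horizon
    u = (lon / (2 * math.pi) + 0.5) * eq_w
    v = (0.5 - lat / math.pi) * eq_h
    return u, v
```

To render a full 1920 x 1080 frame, an implementation would evaluate this for every output pixel and sample the source image at the returned position, for example with bilinear interpolation. Animating yaw and pitch over time produces a moving "camera" inside the captured sphere.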

Part 2 Overview - Lidar/AR/Virtual Environment Design

Part Two focuses on digitising affective performance motifs through XR LiDAR post-production processing and augmented reality (AR) prototyping.

The design and production practices and techniques I applied in Part 2 are:

Creative Direction

Digital Media Production Design

3D Scanning and acquisition of virtual reality (VR) and augmented reality (AR) assets

3D post-production and design

Rendering and curation of digital environment selected stills

Production design thinking and planning for further iterative development including image/digital XR assets

Sound Design

Music Composition - ‘Holding Pattern’


My focus in the following scenes is to depict the dualistic, collapsing and expanding nature of virtual representations of the digital self, in dialogue with dual masculinity as a single, non-binary entity.

As an artist, I find it important to begin challenging and exploring, more deeply, aspects of ‘masculinity’ and ‘masculine’ beauty when they are confronted with the void that technology presents to the human form, to consciousness, and to the nexus of co-existence across physical and digital space.

Scene 1

Scene 2

Scene 3

Scene 4

Scene 5

Scene 6

Scene 7

Scene 8

Scene 9

Scene 10

Music & Sound Design

Composition | production | Engineering

Publishing | Sync | music video


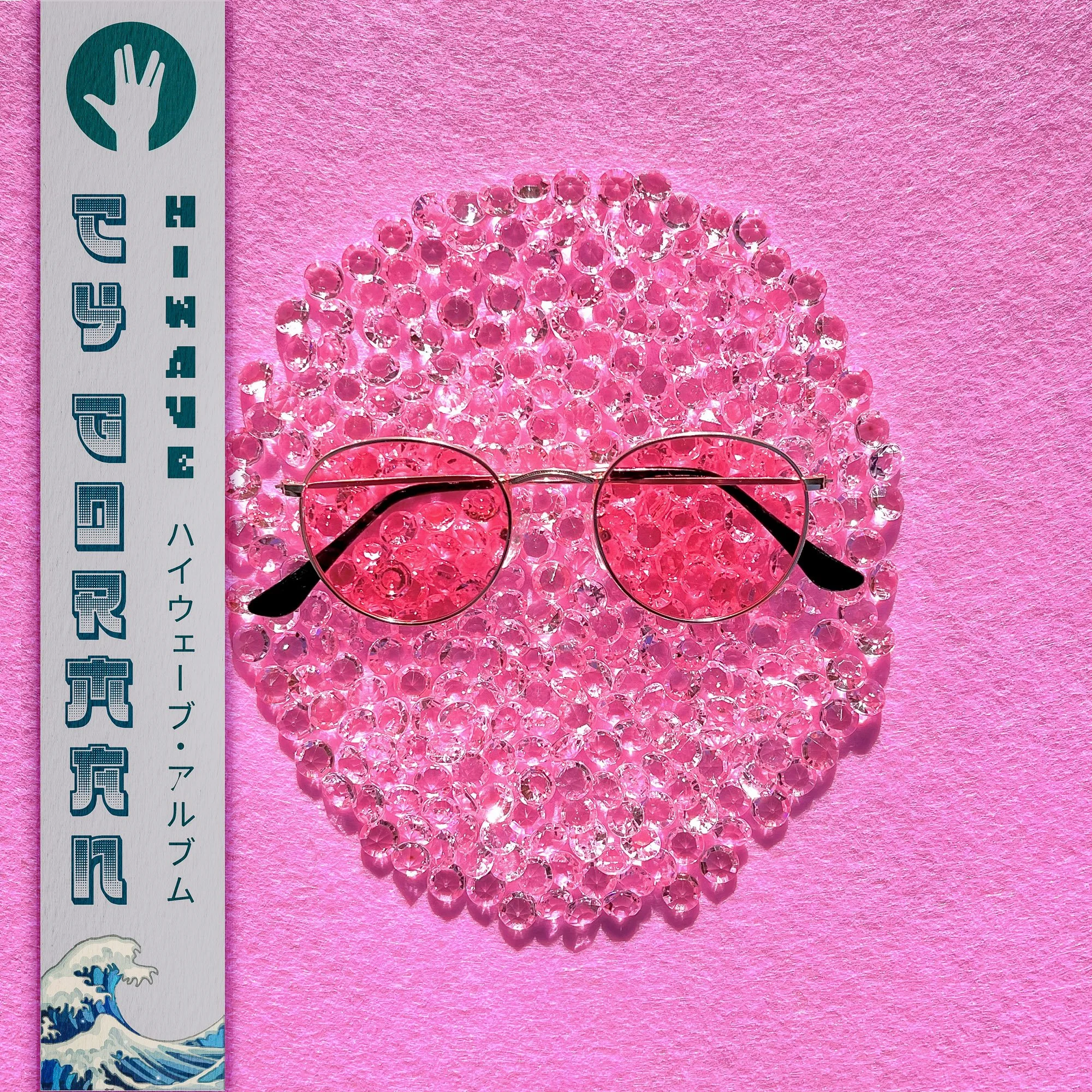

An intro to the Cy ‘musicverse’

Netflix Feature: One of my recent milestones was having my music licensed by Netflix, with my song “Hiwave” featured in the soundtrack of the Netflix series Heartbreak High (Season 2). Hearing my track play in a hit show was a thrilling moment and underscored the broad appeal of my sound in visual media.

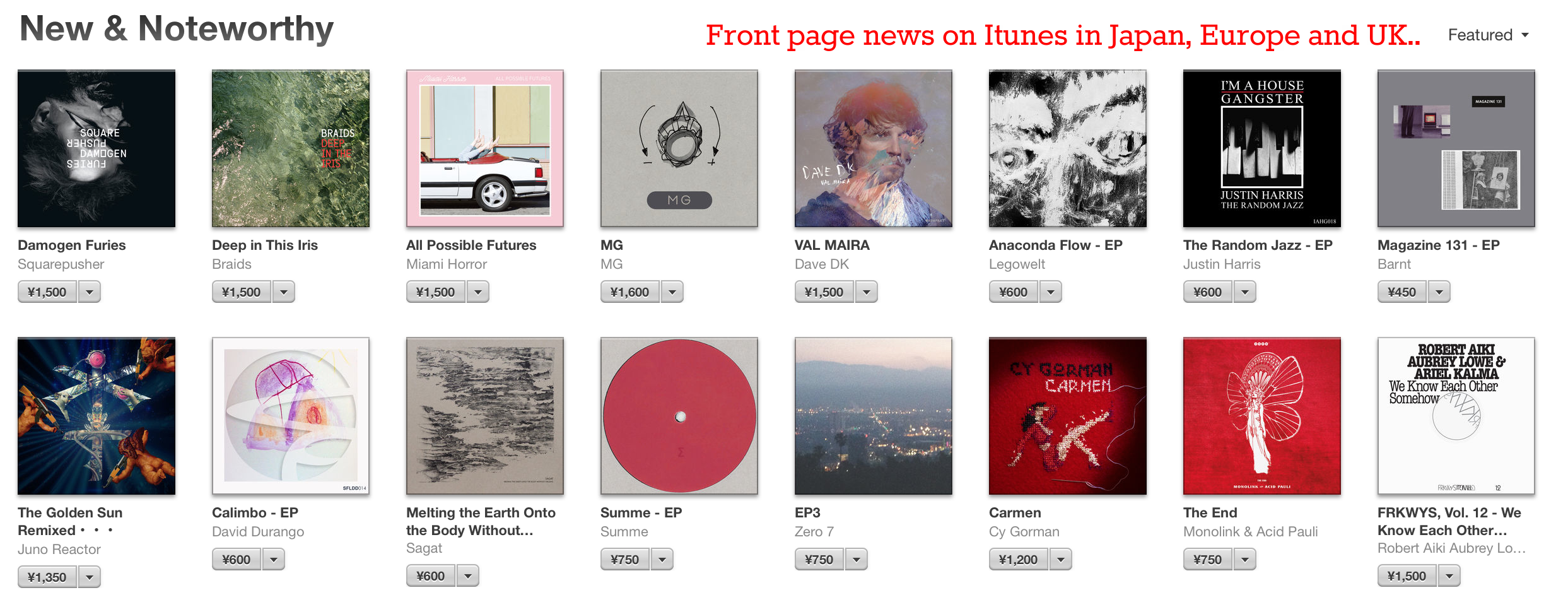

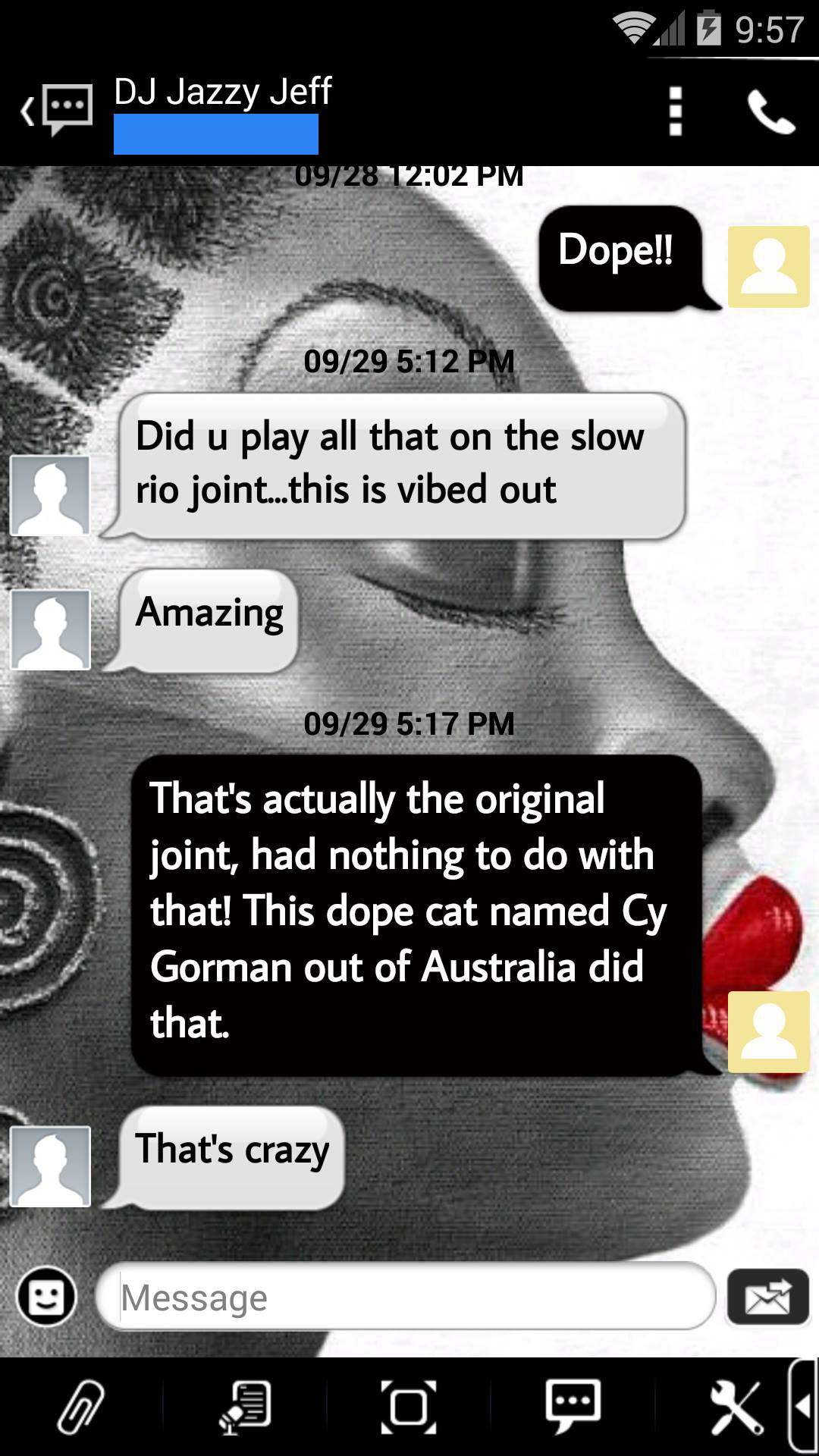

Critical Praise: Over the years I’ve been fortunate to earn praise from respected figures in the music world. Gilles Peterson and DJ Jazzy Jeff have both championed my work. I’ve also received props from L.A. tastemakers like Garth Trinidad of KCRW (who put my music on rotation), and top international experimental artists such as Kaitlyn Aurelia Smith have shown support for my sonic explorations.

Music Video Examples:

🎹 Cy Gorman Acoustic - Live 🎹

Published Works

Radio & Playlists: My tracks have traveled globally, finding their way onto radio stations and playlists across continents. I’ve enjoyed BBC Radio play in the UK and frequent spins on KCRW in the US, as well as rotation on Australia’s Triple J and worldwide platforms like Worldwide FM. Online, my songs have been showcased on the Bandcamp Weekly show and selected for Spotify’s Fresh Finds and Apple Music’s editorial playlists. It’s humbling to know that my home-brewed tunes can connect from Tokyo (InterFM) to Paris (Hotel Costes) and beyond.

Industry Highlights: Hiwave’s release opened some exciting doors. It was featured in the Best of 2022 playlist from world-renowned US label Ghostly International, which was a huge honor as I’ve long admired Ghostly’s artist roster. I’m also grateful to have had my work embraced by fellow artists and DJs around the world, from the soulful crew at Melbourne’s Wondercore Island to international acts like Surprise Chef, along with support from labels behind acts like BADBADNOTGOOD and Jordan Rakei. Each nod of recognition motivates me to keep pushing my creative boundaries.

🌊 Previous Cy Gorman Records 🌊

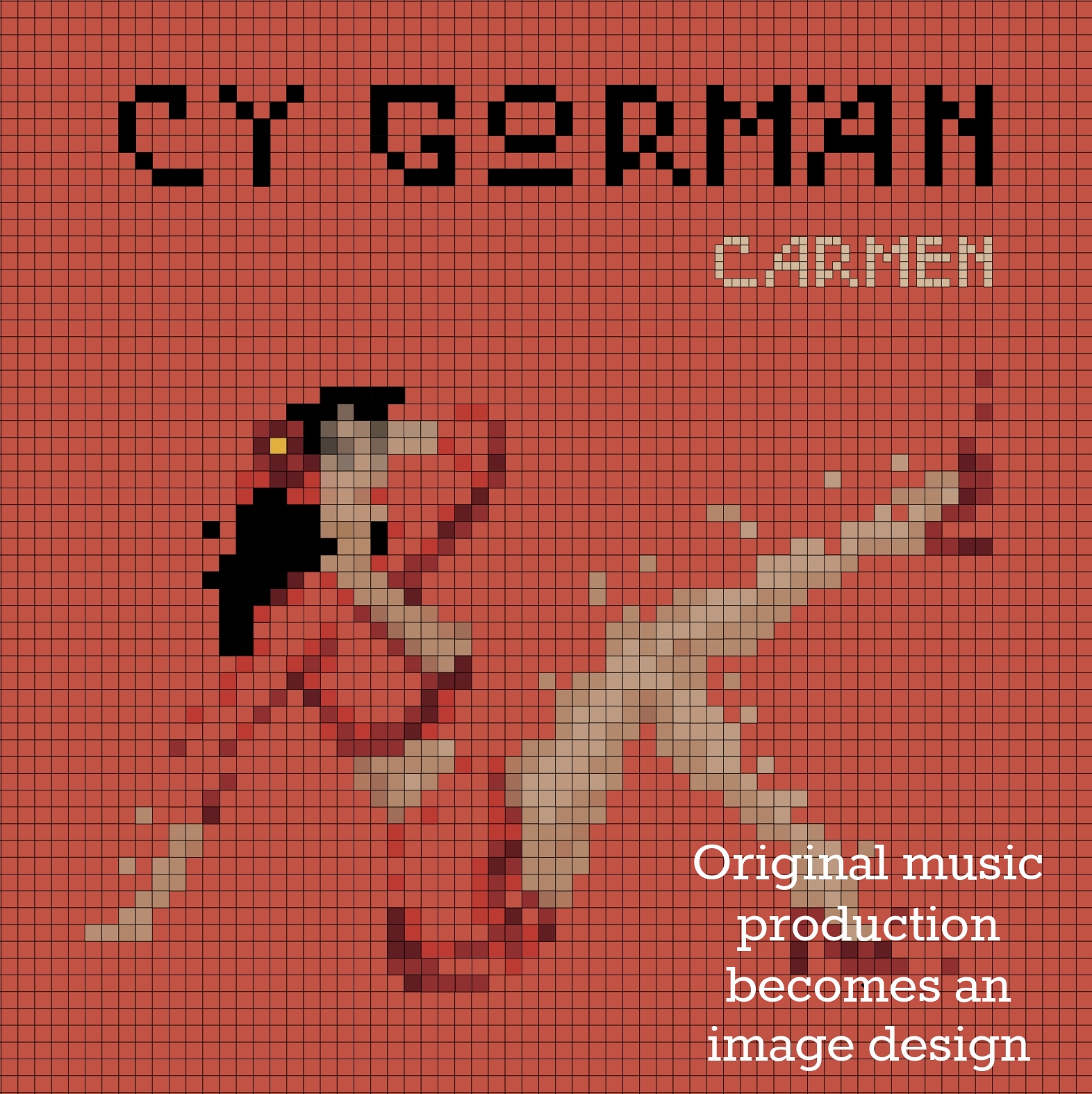

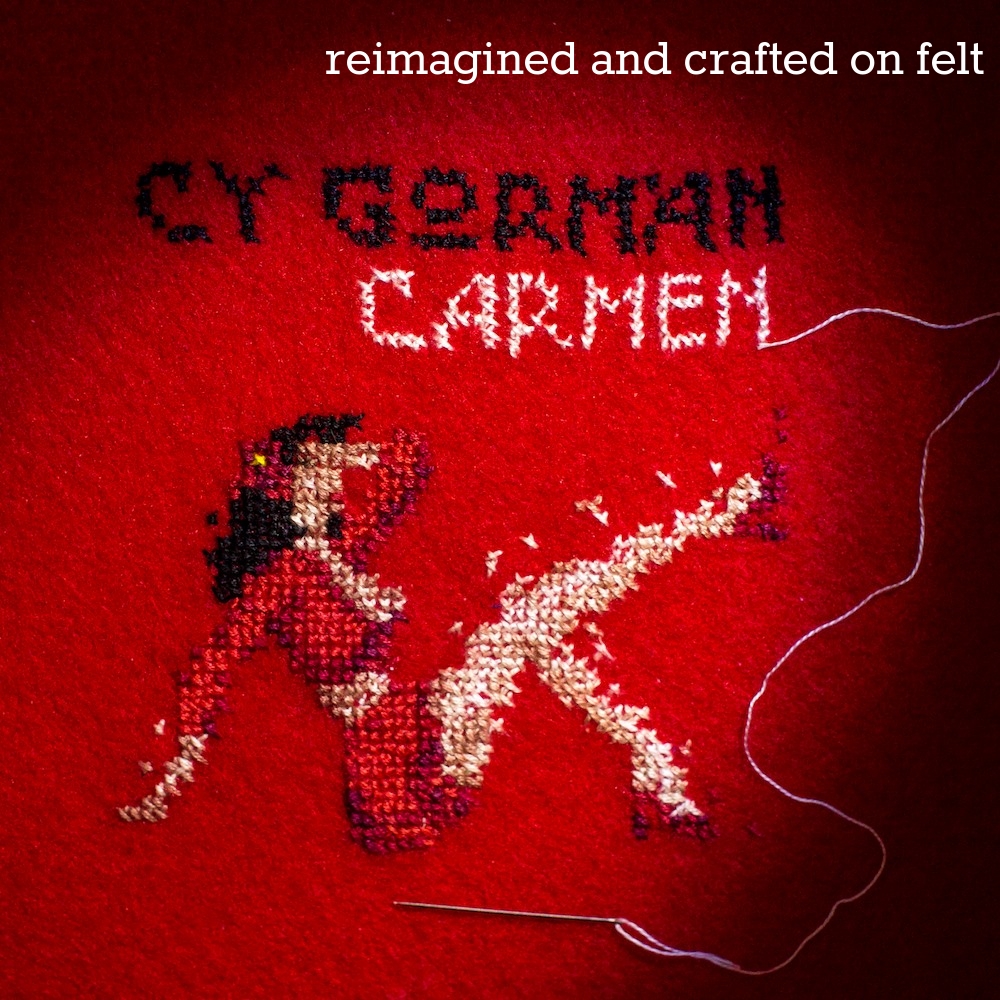

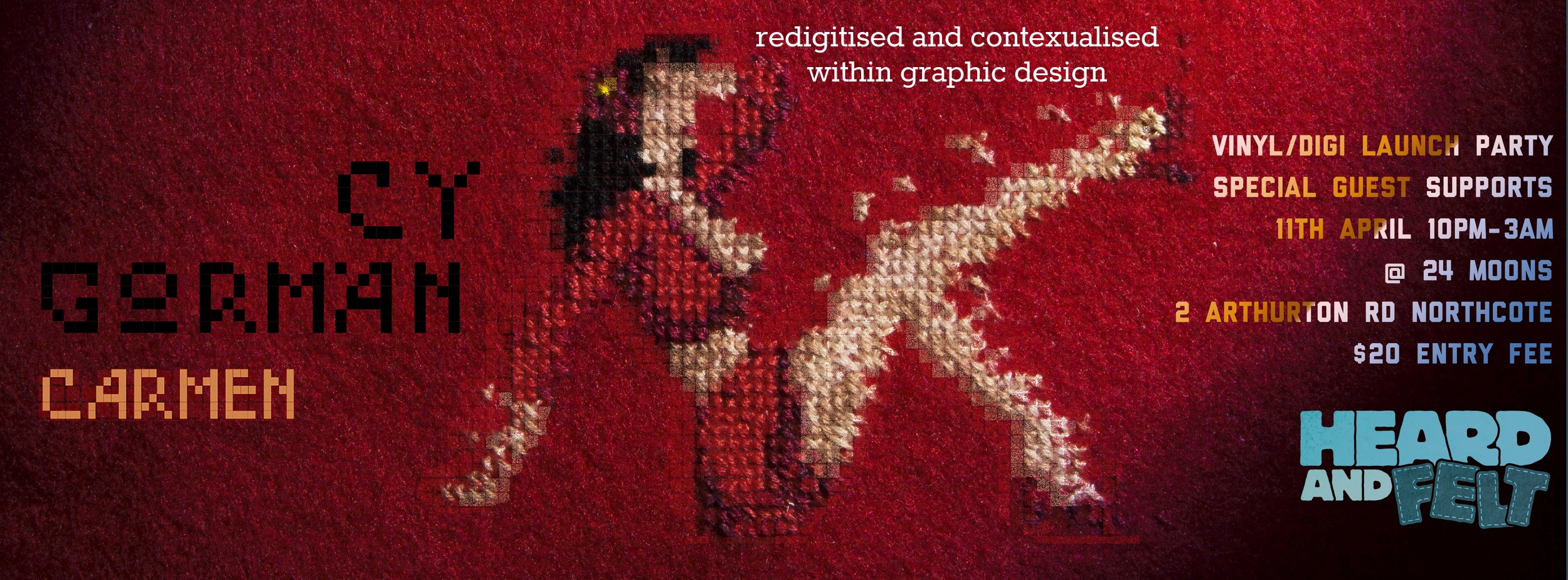

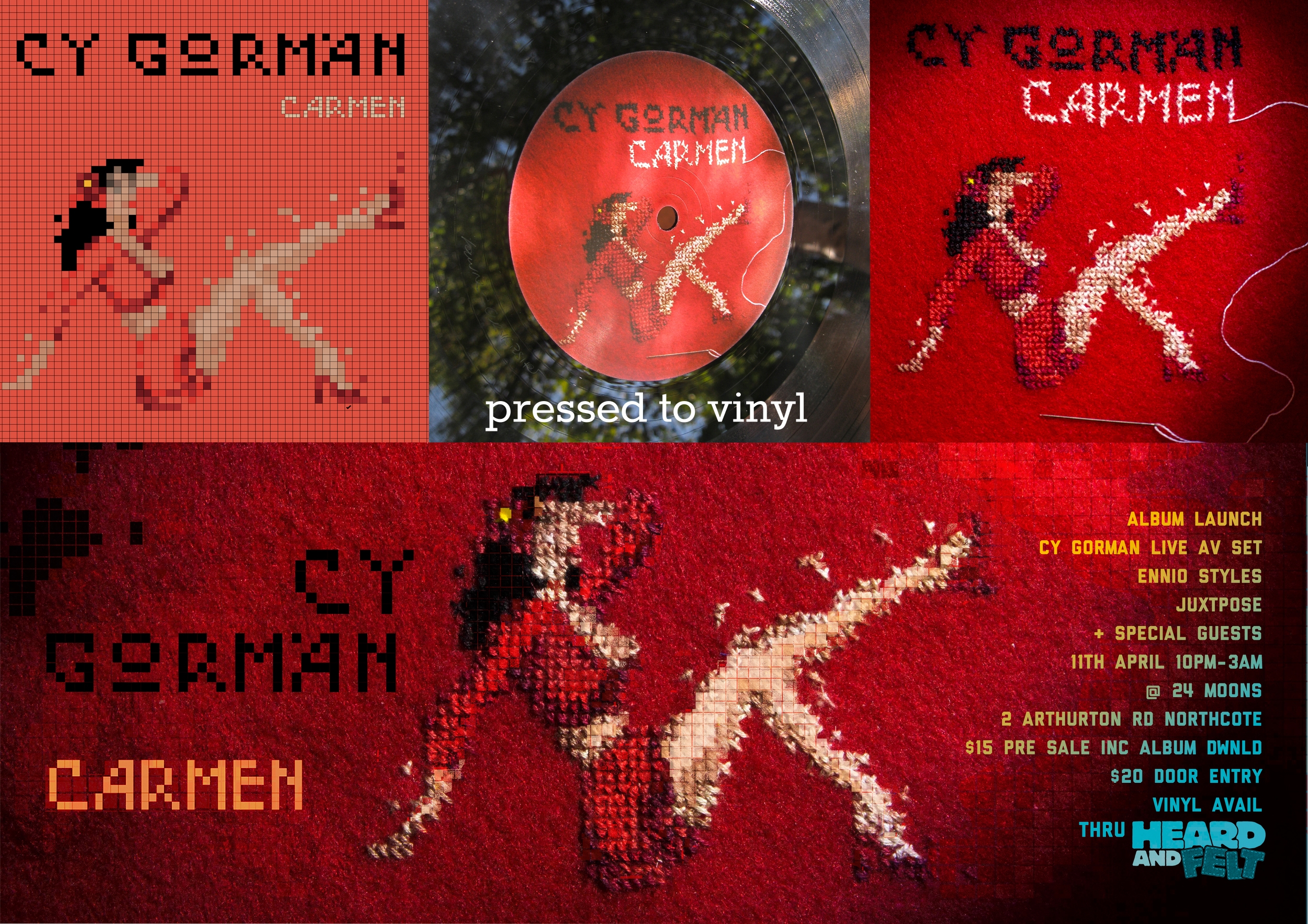

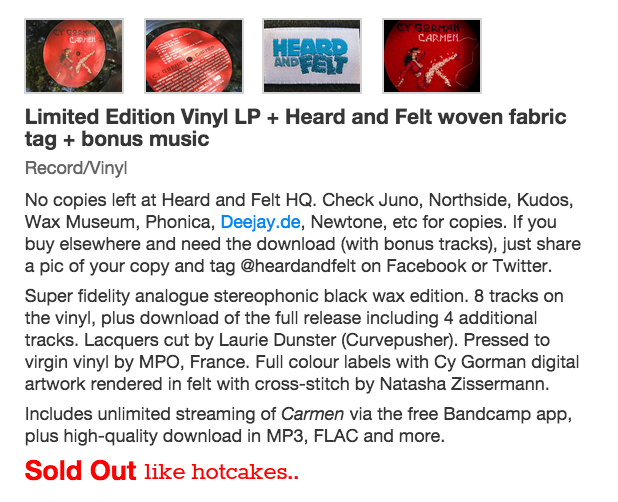

💃 CARMEN - ALBUM 💃

“A great artist ... Love the sweetness of The Wishing Well and the Daniel Crawford mix of course!”

EP PRODUCER

Graphic Design/Visual ARTIST

COMPOSER

PRODUCER

RECORDING ENGINEER

MIX ENGINEER

Mix/Production Arrangements

Album Launch Insights

8 FOOT FELIX (Producer)

☠️ 'MAD ISLE' - ALBUM ☠️

Album Producer

Music and Rehearsal Director

Co-Writes

Mix/Production Arrangements

Additional Overdubs

Co-Arranger

Co-Mix Engineer

'MAD ISLE' - MUSIC Video Director/Maker

WHITELIGHT (Producer)

💌 L * V E - EP 💌

Album Producer

Rehearsal/RECORDING Director

RECORDING ENGINEER

MIX ENGINEER

MASTERING

Mix/Production Arrangements

Additional Overdubs

Co-Mix Engineer

CY GORMAN (Artist/Producer)

🦋 KUPU KUPU - EP 🦋

EP PRODUCER

ARTIST

COMPOSER

PRODUCER

RECORDING ENGINEER

MIX ENGINEER

Mix/Production Arrangements

SAN LAZARO (Remix Producer)

👾 REMIX - SINGLE 👾

PRODUCER

REMIX ARTIST

PRODUCTION ARRANGER

PROGRAMMING

ADDITIONAL MUSICAL OVERDUBS

RECORDING ENGINEER

MIX ENGINEER

“Blending the futuristic and the classic, Cy Gorman’s remix of San Lazaro’s sultry ballad Ladridos is a mesmerizing meeting of two worlds. A dark, sparse and completely analogue tango ballad twisted into a deeply bent piece of symphonic electronica, synthesizing elements of cumbia, deep house and dub.”

LOS CHARLY'S (Remix Producer)

🍏 REMIX - SINGLE 🍏

CO-PRODUCER

PRODUCTION ARRANGER

PROGRAMMING

ADDITIONAL MUSICAL OVERDUBS

RECORDING ENGINEER

MIX ENGINEER

“London/Venezuelan troupe Los Charly’s Orchestra handpick their favourite artists to deliver a series of genre-smelting remixes. The result is an instant party that stretches from the Latin-flecked jazz, to G-funk, breaks, house, disco and loads more. Highlights include the tight slick chops of Cy Gorman & Ennio Styles LA beat flavoured take on Rediscovering The Big Apple.”

CY GORMAN (Artist/Producer)

🆒 COOL CHANGE - SINGLE 🆒

PRODUCER

PRODUCTION ARRANGER

PROGRAMMING

ADDITIONAL MUSICAL OVERDUBS

RECORDING ENGINEER

MIX ENGINEER

MASTERING

TNG (Artist/Collaborator)

🪘 ABOUT TIME - SINGLE 🪘

CO-WRITE

GUEST VOCALIST

HOOK WRITER

ADDITIONAL MUSICAL OVERDUBS

CY GORMAN (Artist/Producer)

👹 CARMEN EXTRAS - EP 👹

EP PRODUCER

ARTIST

COMPOSER

PRODUCER

RECORDING ENGINEER

MIX ENGINEER

Mix/Production Arrangements

DANIEL CRAWFORD

RDJ BY CY GORMAN

📼 REMIX - SINGLE 📼

CO-PRODUCER

ARTIST

CY GORMAN

🌕 SOUL MIRAGE - EP 🌕

EP PRODUCER

ARTIST

COMPOSER

PRODUCER

RECORDING ENGINEER

MIX ENGINEER

Mix/Production Arrangements

The Spirit of the Black Dress

Music PRODUCER

Recording ARTIST

Programming/Sampling

RECORDING ENGINEER

MIX/Mastering ENGINEER